basilh@virginia.edu

@basilhalperin

This fall was my first time teaching – one class, undergrad intermediate macro, with 60 students.

(While I did many stints as a teaching assistant during grad school, being the professor I think is a bit of a different beast. You’re ultimately responsible!, and you have to hold a lot more in your head at once. There’s a lot more that could be said – and that I hope to say in the future – on the experience of teaching, and also on how I taught intermediate macro in particular. But that’s not today’s post.)

Before the start of the semester, I decided to put together a list of “principles of teaching” to try to guide my teaching efforts. Because teaching is such a high-dimensional design space – when you start teaching, you are really given an extreme, an extraordinary level of freedom to do whatever you want – I wanted some points to anchor to.

Below, I start with some brief thoughts on the goals of teaching. Then, I list the principles for achieving those goals, plus some references/selected quotes that inspired each (the principles mostly drew on the experience of others). I‘ll hide all but the very best quotes below the clickable arrows – but I do recommend opening and reading the quotes.

I think of four goals of teaching:

An alternative framing is that there are two populations when teaching, and I’m aiming for different treatment effects on each:

The extent to which students find themselves in the tail versus in the mass is of course endogenous – aiming to inspire is important for affecting this!

0. Have extreme empathy. Honestly, I think quite literally everything is downstream from this.

1. Grading should be predictable. This is a boring one to start – it’s not about actual learning – but of course, from the perspective of the student this is pretty central.

2. Enthusiasm matters! Show that you care – channel your enjoyment! This reflects both that teaching is an entertainment service; and it reflects the goal of inspiration. Tyler Cowen puts this fantastically well:

3. Actively solicit feedback. This is one of those things that is obvious but maybe not obvious if you’re not intentional about it.

(i) David Cutler: “Whenever students talk to me outside of class, I always ask them how the course is going.”

(ii) Besides the benefits for teaching itself, students themselves directly value the opportunity to have voice.

(iii) Obviously, receiving feedback may be painful; and feedback may be actively wrong/unhelpful (or even worse!). But perseverance here seems important.

4. Teaching is hard because of the curse of knowledge. After spending 10,000 hours thinking about a topic, it requires intentional, costly effort to put yourself back in the shoes of someone seeing something for the first time.

5. Teach in multiple ways: target different parts of the distribution of students + recognize that the distribution is multidimensional.

6. Keep it simple – but that doesn’t mean easy: teach fewer things but teach them more deeply. (This principle is not universal to all courses.)

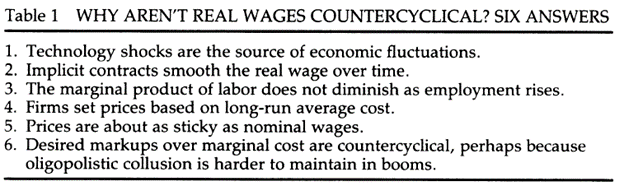

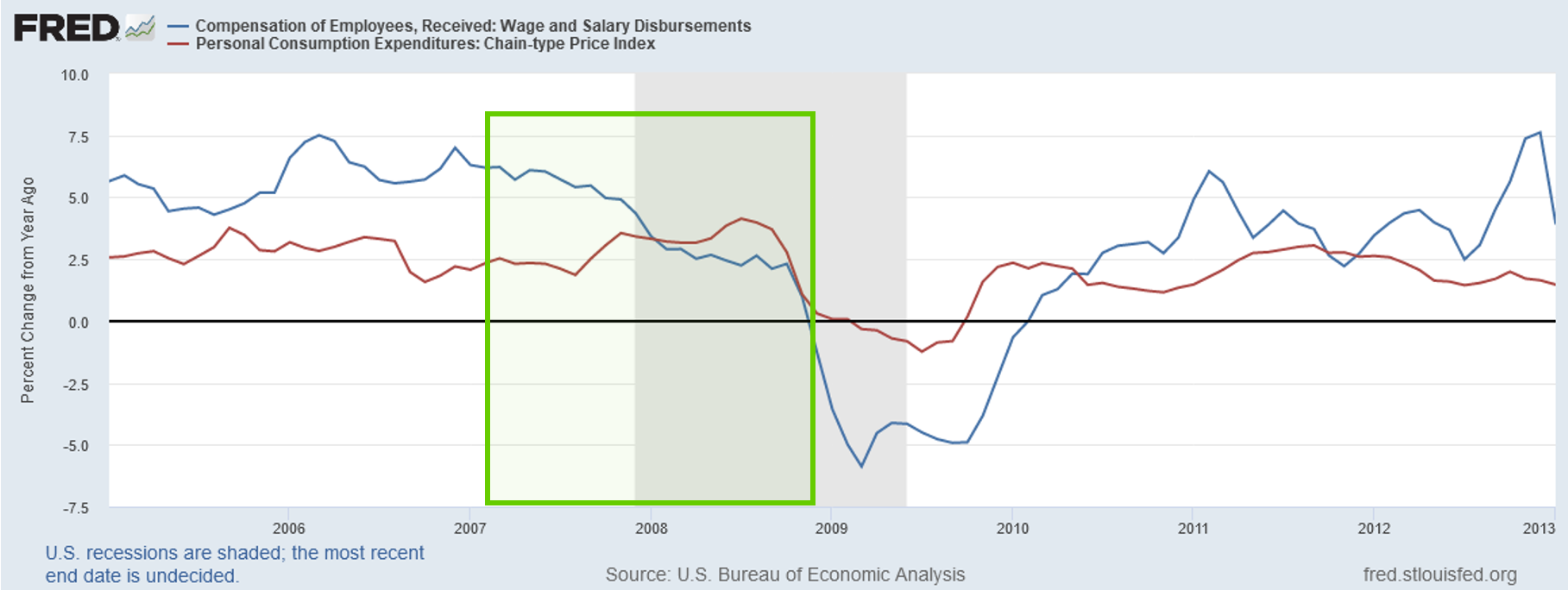

7. Each and every theory must be presented back by empirical evidence, not passed down as wisdom of the ancients.

8. Inoculation is an important part of the job: against appealing-but-wrong ways of thinking; and against popular-but-wrong “facts”/memes.

9. It’s okay or may even be good if learning feels painful.

10. Repetition is important (especially for undergrads). Backtracking at the start of class is a good way to achieve this.

11. Consistency is important, e.g. in notation and terminology.

12. Teaching facts and encouraging memorization of facts is underrated.

13. Simple, decisive empirical moments are both more memorable and plausibly more important evidence than fancy complicated evidence.

14. “See the other side”: help students understand the perspective of other students.

15. Considering extreme cases is usefully clarifying.

16. Rapid feedback is important.

17. Ensuring students get reps in is important.

18. Cold calling is useful. Three reasons: (1) it incentivizes active learning; (2) it keeps energy high; (3) it allows me to check understanding.

19. Connecting to current events is useful.

20. Teaching centered on questions may be useful.

21. Sitting in on colleagues’ lectures for the same course is useful (h/t Luke Stein).

(I was not fantastic about principles 16-21 this semester.)

22. Teaching dialectically and showing the history of thought is useful.

23. Get student buy-in on the electronics policy: I like Justin Wolfer’s approach.

24. Send encouraging emails at the end of the semester to relevant students (h/t a deleted tweet from Kathryn Paige Harden).

25. Smile (see principle #2).

Brief thoughts, very relevant for teaching economics, especially to undergrads of heterogeneous ability:

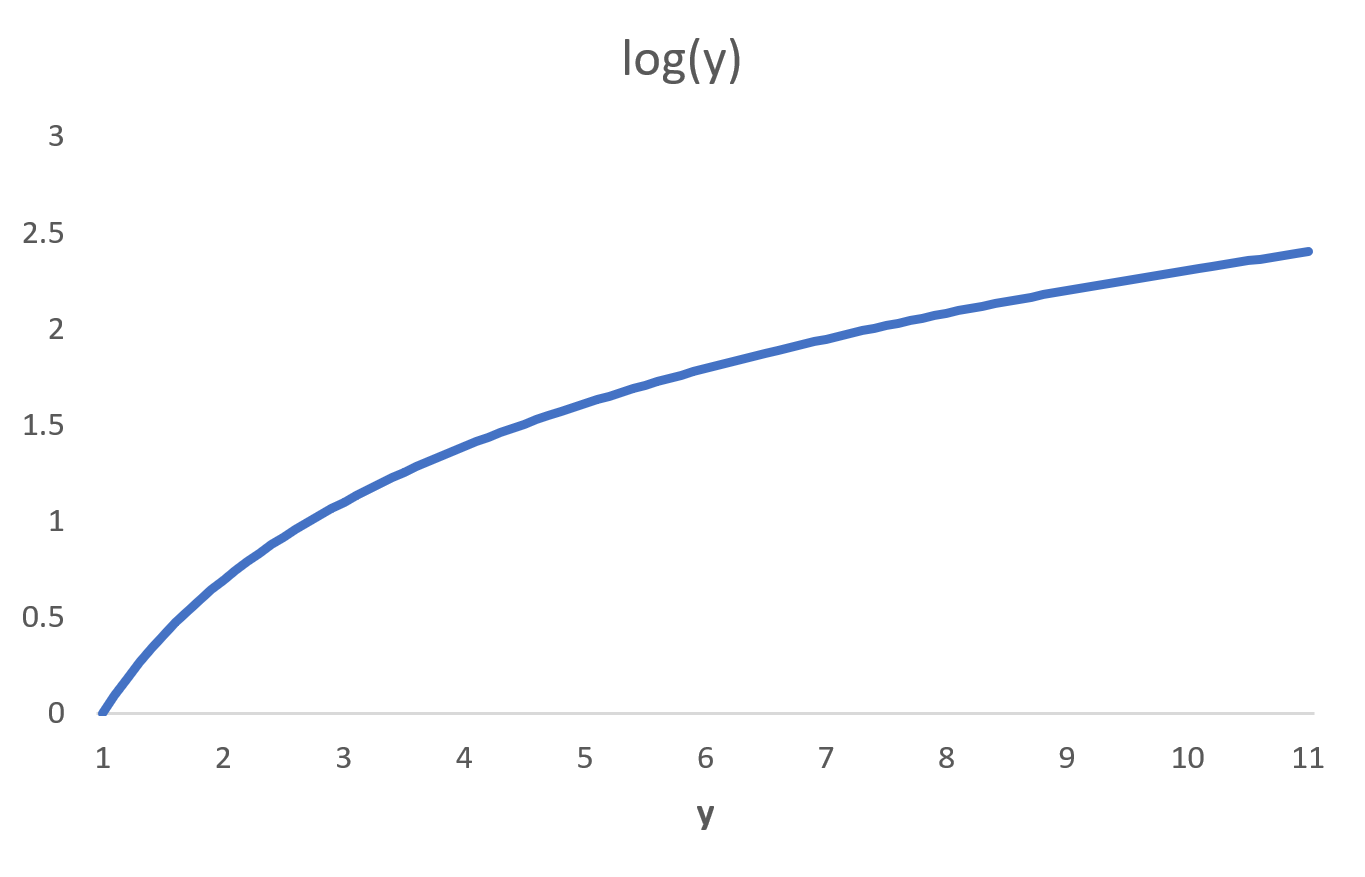

(1). The use of math and formal models is an act of intellectual humility.

(2). Models are intuition pumps: “All models are wrong, but some are useful”.

(3). “Burn the mathematics” [Marshall]: math forces you to be precise and internally consistent – but it’s important to always translate back and be in dialogue with economic intuition.

(4). Be cautious about the mapping between math and reality.

(5). Formal proof is the last step of mathematical understanding.

As a grad student, when TAing for the first time, I liked reading The Heart of Teaching Economics. Simon Bowmaker interviews a set of well-known economists and asks them about how they teach. Bowmaker has also posted many of these interviews for free on his Twitter – a handful are linked with the quotes above.

Originally posted as a Twitter thread.

How much of Chinese growth is *TFP growth* vs ‘merely’ Solow catch-up growth?

A mini lit review, for the purpose of attracting real experts to correct my errors 🤠

The natural background to the China question is the lit on the rapid growth of Japan + the East Asian Tigers (Hong Kong, Singapore, South Korea, Taiwan), on which I recently gave an opinionated summary

Something like half of East Asian Tiger growth was from TFP growth – call it 3% per year

As context, US TFP growth over this period was ˜1%. So 3% is pretty fast!

=> Tiger growth seems to have been not just Solow-style catch-up growth (again, see previous thread)

Okay, well what about China?

How much of Chinese growth has been from actually producing things more intelligently, ie TFP growth, versus just “adding more machines to the economy, shuttling more kids through school”?

Again starting with Alwyn Young, the 2003 paper (“Gold Into Base Metals”, his amazing trademark deadpan):

He estimates 1.4% TFP growth 1978-1998 – not that high, but still accounting for ˜75% of [non-ag] growth (!)

(the “Adjusted” column uses an alternative GDP deflator)

Aside: in this paper Young switches from the traditional growth accounting method (used in eg Young 1995) to the adjusted (“Harrodian”) method I described – which properly attributes the growth caused by capital accumulation induced by higher TFP, to TFP

Brandt and Zhu (2010) revise Young’s estimate even further up – with updated data and correcting the deflator. Zhu (2012, JEP) is a very readable summary, showing:

From 1978-2007, TFP growth averaged 3.2% per year (!!) – again accounting for more 3/4 of GDPpc growth

You can also see in that^ table an accounting for growth under Mao, 1952-1978: 3% growth in GDP per capita [on avg 💀], entirely due to K+H accumulation – annual TFP growth was -1%!!

If I had to recommend one paper here, it would be this Zhu (2010) paper

Will also note – Cheremukhin, Golosov, Guriev, and Tsyvinski (2015, 2024) do an interesting wedge accounting of the Chinese economy, mostly discussing pre-reform but also briefly post-reform. I’m not sure if their results match the rest of this thread

Other growth accounting papers covering the period up to 2008 also show 50-75% of Chinese growth is accounted for by TFP growth (Bosworth and Collins 2008; Zheng, Bigsten, and Hu 2009; Rajah and Leng (2022))

Ok, well that was part 1: TFP growth in China was high 1978-2007, accounting for 75% of Chinese growth over the period (after being quite negative under Mao)

Part 2 is: TFP growth has slowed down, a lot, since 2008 and the Great Recession

I haven’t been able to find any fantastic papers doing a growth accounting for the last decade (please send!!) and have been unwilling to wade into the data myself beyond the offensively crude cut I did here

BUT all the available evidence points to a major slowdown in Chinese TFP growth since 2008:

Brandt et al (2020) estimates a pre-2008 ten-year annual TFP growth of 2.8%

vs.

Post-2008 ten-year annual TFP growth of 0.7%

Rajah and Leng (2022) do a classic growth accounting decomposition, and also find TFP growth slowing substantially in the 2010s

Plausibly this slowdown is due in part to distortions caused by the policies enacted to avoid the global Great Recession?

Again, I would love to read more analysis of the Chinese TFP slowdown of the last 15 years / the Xi Jinping economy, please send links 😀

Ok, so:

1. Part 1 was that Chinese TFP growth was high after the reform & opening up

2. Part 2 was that TFP growth slowed substantially post-2008

What’s the future of Chinese growth?

The fundamental fact is that Chinese TFP is still far below that of the US: there is a lot of room left to run. China could still grow a lot. Obviously, the question is: will it?

Zhu (2012) points out: in the ˜30 years post reform and opening up, China went from 3% of US TFP to 13% (in 2007)

In comparison:

Japan TFP 1950: 56% of US -> 83%

Korea TFP 1965: 43% of US -> 63%

Taiwan TFP 1950: 50% of US -> 80%

Again: China has a lot of room left to run...

Here’s my quick and liable-to-be-incorrect figure showing “Chinese productivity has a lot of room left to grow”:

Plotting the Y/L trajectory for major East Asian economies vs. TFP – data simply pulled from PWT. Chinese TFP is low

(That same PWT data, though, also shows Chinese TFP being flat relative to the US over the last 60 years, which contradicts the main thesis of this thread, so...

¯\_(ツ)_/¯)

(I would assume the PWT TFP estimate quality <<< the quality of the cited papers)

Anyway –

The best analysis that I could find on projecting future Chinese growth [modulo the singularity etc] is Rajah and Leng (2022), which uses a neoclassical framework

Rajah and Leng (2022) assume a continued gradual reduction in Chinese TFP growth until convergence to frontier TFP growth rates

Between a TFP slowdown, an aging population, and declining {urban population, housing, public investment} growth –

They forecast 3% GDP growth by 2030

An even more direct approach to projecting future Chinese growth is Fernandez-Villaverde, Ohanian, and Yao (2023), where TFP growth is projected statistically

The idea is: China’s growth path has followed shockingly closely to other East Asian miracles. What if that continues:

Again, additional references/corrections welcome

These threads brought to you by some binge reading before a big trip to China last month. Cold War II sucks but at least we’ve still got growth accounting 👍So that’s my read of the China growth accounting lit:

1. TFP growth was fast + was an important part of the miraculous decades of growth, which rejects crude Solow-ism

2. TFP growth has slowed down since 2008

3. There is a lot of room left that the PRC could run

Some bonus China economy charts from my lit binge:

1) China is spending more on R&D than you would expect based on GDP per capita

(my update of a chart from Wei, Xie, and Zhang 2017)

2) Consensus long-term China real GDP growth forecasts:

3) Chinese public investment has been higher than other East Asian miracles over the whole development trajectory (Rajah and Leng 2022):

4) I haven’t looked closely into these TFP charts but they are interesting and plausibly more accurate than my similar quick and dirty one: (source 1, source 2

5) The Chinese economy is shifting towards services; and this trajectory is surprisingly predictable across countries (Chen, Pei, Song, and Zilibotti 2023)

[primary = agriculture; secondary = manufacturing; tertiary = services]

Bonus book recommendation: Yasheng Huang

Will hopefully do a thread on this but Yasheng Huang’s EAST is probably the best big think book on China I’ve read + great “applied political economy theory”

— Basil Halperin (@BasilHalperin) June 2, 2024

Bonus podcast recommendation: Yasheng Huang interview

Originally posted as a Twitter thread.

I think the Solow/neoclassical model is overrated *for explaining catch-up growth and convergence*

Some thoughts, as a prelude to a thread on Chinese growth accounting:

Let me frame the argument against Solow by appealing to the debate over the rapid growth of East Asian Tigers (Hong Kong, Singapore, South Korea, Taiwan) from 1960-2000:

1. Did the E. Asian Tigers grow fast because of rapid productivity improvement?

vs.

2. Was it merely Solow catch-up growth: poor countries start off with few machines...

...build more (“capital accumulation”)...

...and grow faster until they have enough machines (“convergence”)

Alwyn Young (1992, 1995) famously argued that the rapid growth of the East Asian Tigers was largely not due to productivity growth

Paul Krugman popularized this view in an influential Foreign Affairs article, “The Myth of Asia’s Miracle”, suggesting effectively zero TFP growth

From Young’s legendarily careful data work, it was argued Tiger growth was merely capital accumulation: Solow-style catch up growth

The growth accounting from Young (1995) – “the tyranny of numbers” – shows TFP growth explaining at most 30% of Tiger growth

That is: Hong Kong, Taiwan, S Korea, and especially Singapore simply started in 1960 with few machines and low education

The rapid growth merely came from building more machines and shuttling more kids through school – not from producing things more intelligently (TFP growth)

This Krugman-Young story is precisely the simple story of convergence of Solow!

Again: poor countries start off with few machines, then build more (“capital accumulation”) and so grow faster until they have enough machines (“convergence”)

This is the view of the E. Asian Tiger experience I had accepted, until recently

There are a few issues, with the overriding fact being that: growth accounting is actually just really hard. Empirically tough, but also conceptually

I’ll focus on a conceptual issue (“wonkish”)

Higher TFP causes capital accumulation:

If you get more productive, you want to (gradually) build more machines

=> AND those machines cause more growth

Should we attribute that higher growth to the machines, or to the productivity?

Traditional growth accounting gives credit for growth caused by that extra capital to capital itself

But it really makes more sense to give (long-run) credit to the higher productivity, which is itself causing the extra capital!

Brief Cobb-Douglas math for the curious (in logs):

Traditional growth accounting: Y = A + α⋅K + (1-α)⋅L

Instead: Y – L = (1/(1- α))⋅A + (α/(1-α))⋅(K – Y)

(See eg Klenow-Rodriguez-Clare 1997, or Jones 2016 sec 2.1)

So for example, Klenow and Rodriguez-Clare (1997) reanalyze the East Asian Tiger experience: properly accounting for TFP-induced capital accumulation + analyzing Y/L rather than Y

They find that TFP growth accounts for 50-75% of Tiger growth (!), except for Singapore

Briefly, another critique of the neoclassical (Young/Krugman) view is Hsieh (2002), who argues that the rate of return on capital didn’t fall in Singapore, as the neoclassical/Solow model would predict – implying higher TFP growth. This is the “dual approach” to growth accounting

[PART 2: an alternative view of catch-up growth]

Just from armchair theorizing, the Solow model view that faster catch-up growth is all due to capital accumulation feels a little odd, IMO

Do poor countries really grow fast because they’re just accumulating capital (building more machines, etc), a la Solow? Isn’t that kind of crazy?

A priori, aren’t poor countries also catching up by **importing technology**?

IMO the Solow model is plausibly... overrated 😎

Overrated, since Solow attributes fast catch-up growth entirely to temporarily high capital accumulation – and not at all to higher technological growth

Poor countries also have low productivity! Shouldn’t some of the catch-up growth come from simply imitating tech leaders?

(that^ tweet is the punchline of the thread!)

The first attempt (AFAIK) to model catch-up growth as being caused by technological diffusion, rather than Solow-style capital accumulation, was Barro and Sala-i-Martin (1997). The Acemoglu textbook ch. 18 also has a clear exposition

These models do what you would expect:

“Imitation is cheaper than invention”, so countries behind the frontier can copy ideas cheaply and grow TFP and GDP quickly

As they catch up in terms of TFP, it gets more expensive to copy ideas – you’re trying to imitate semiconductors instead of tractors – and growth falls

In the long run, all countries grow at the rate of the frontier – and the frontier is nicely determined exactly as in the canonical growth models

The framework matches the armchair view of historical catch-up growth – it is driven in part by poor countries getting more productive by copying technology

That said, obviously a lot of catch-up growth is ALSO surely due to Solow-style capital accumulation

A crude extrapolation is 50-50 on average: half productivity catch-up + half capital accumulation catch-up

I haven’t seen a paper doing the decomposition across all catch-up growth episodes (many papers look at all countries/times – mixing in BGP level differences, I think)

Maybe postwar Europe (which surely motivated Solow) – with the rebuilding after the destruction – was catch-up growth driven mostly by capital accumulation, not technology diffusion

(Though maybe not, based on this dirty cut adapted from Dietz Vollrath?)

Recent Vollrath blog seems to show that

— Basil Halperin (@BasilHalperin) March 26, 2023

Postwar European rapid growth was not catch-up growth -- i.e. it wasn't Solow-style capital (re)accumulation -- which I thought was the consensus view

It was TFP growth (?)https://t.co/HsZe9RaEmA pic.twitter.com/e7N6DnVD1o

Convergence is, obviously, a huge topic in the lit and I feel like there must have been more study of this / someone else must have framed the question this way:

“Is catch-up growth due to Solow-style capital accumulation vs. technology diffusion?”

References very welcome!

Originally posted as a Twitter thread.

Miscellaneous things I learned in [econ] grad school:

1. The returns to experience are high(er than I thought)

- Someone who has studied a single topic for a decade or two or three really does know a LOT about that topic

One way you can see this: the way experienced faculty have seemingly photographic memories of subsections of papers and interactions in seminars from years ago --

In the same way that experienced NBA/chess players can have photographic memories of games

2. Even in the 6-year PhD, you can see slow-growing returns to experience. This feels weird!

Eg only after years of seminars did I begin to feel attending was ever worth the time (compared to just reading the paper). The brain sometimes needs LOTS of training data to rewire

3. All that said though, youth/fresh eyes are still crazy powerful for "thinking about things" ie research

- It's bonkers that a 20-year-old can bring insight to a topic studied for ***decades*** by others

4. It's hard to keep learning new things while doing research

While doing research, it feels like there is a temptation to just grind on the research, and not continually invest in learning new things. Tough balance 🙃

The pressure is to *produce now*, which can lead to myopic underinvestment in human capital

"Fail[ing] to continue to plant the little acorns from which the mighty oak trees grow", as Hamming put it. Extremely real!

On the experience of grad school:

5. It's more like high school than undergrad: it's a smaller environment, everyone knows each other for years; it can feel like you're less in control of your fate (imho)

6. Or: grad school is more like being unemployed than it is like being employed. The amount of free time is nuts and it drives people mad

Unstructured time can really screw with people's heads. In that respect, grad school is more like unemployment than high school for a lot of people.

— Cameron Harwick 👾🏛 (@C_Harwick) October 28, 2020

7. Academia in general has fractal complexity (this is true of many careers of course): in a lot of ways, the more you learn about what it's like, the more you realize you don't know

(🙃)

8. For example -- this was embarrassingly not clear to me before starting the PhD; trigger warning: naivete -- the classes are 90%+ about teching up, not about discussion

Or:

9. At the frontier, it is not clear whether (written) papers or (oral) seminars matter more...

From the outside, you only see the papers -- it looks like academia consists of the papers. On the inside, it's clear there's less reading and the seminars matter a lot

10. In general, academia/grad school is an oral culture (i.e., not a written culture), and it pays to just talk with people to share information

You can overinvest, but taking the break from research to get coffee with friends and just blab has high EV, in my experience

11. Relatedly: everyone warns you about spending too much time being overly strategic ("just work on your research")

But you can also easily be not-strategic-enough! You see people on both sides of the Laffer peak

12. Sometimes I think of academia as a royal court:

- clear hierarchy

- reputation/status are omnipresent

- *everyone knows everyone* (not really, but it can feel like it), literal *names* matter a lot

- obviously the exclusivity

- ceremony, patronage, etiquette, court intrigue..

^"being like a royal court" has both negative but definitely also positive aspects, to be clear!

cf

Academia has an "honor" culture similar to US South

— Arpit Gupta (@arpitrage) July 9, 2019

In the South, politeness rested on undercurrent of violence against threats to honor

Academia: delicate balance of power from implicit threats of negative referee reports, discussions, tenure letters etcsustains niceness

13. Something that took me longer to learn than I'd like to admit --

Papers are mini textbooks. It's not about having "one big insight" (maybe it used to be). It's about fleshing out *everything* related to your topic (within some bounds)

14. On fields. We're still living in the aftermath of the credibility revolution

- We're still picking the low-hanging empirical fruit made available thanks to these tools; and status in the profession is allocated accordingly

plausibly this is efficient ¯\_(ツ)_/¯

15. On conferences. Conferences (for me at least) have to be exercises in ruthless energy management

16. Relatedly, for doing theory research: it was weird switching from my entire previous life where "time" was the biggest constraint to "energy" being the biggest constraint

(though for most empirical/coding work it's still "time" in my experience)

17. In education, *teaching effort* is that which is scarce

As a student, I would hear education policy recommendation X and think it sounds sensible. As a teacher, I hear X and think about "well that has a benefit, ...but it requires costly effort from the teacher"

18. (A few) things I still want to understand:

(i) The division of labor in different kinds of academic teams. Team production functions vary a lot?

(ii) The life cycle of the academic researcher. How sharply do goals change over time? How much of that is constrained vs. not?

Joint with Trevor Chow and J. Zachary Mazlish. Also posted at the EA Forum. November 2023 update: This post is now an academic paper, here, with some different emphasis and some new empirical evidence

In this post, we point out that short AI timelines would cause real interest rates to be high, and would do so under expectations of either unaligned or aligned AI. However, 30- to 50-year real interest rates are low. We argue that this suggests one of two possibilities:

In the rest of this post we flesh out this argument.

An order-of-magnitude estimate is that, if markets are getting this wrong, then there is easily $1 trillion lying on the table in the US treasury bond market alone – setting aside the enormous implications for every other asset class.

Interpretation. We view our argument as the best existing outside view evidence on AI timelines – but also as only one model among a mixture of models that you should consider when thinking about AI timelines. The logic here is a simple implication of a few basic concepts in orthodox economic theory and some supporting empirical evidence, which is important because the unprecedented nature of transformative AI makes “reference class”-based outside views difficult to construct. This outside view approach contrasts with, and complements, an inside view approach, which attempts to build a detailed structural model of the world to forecast timelines (e.g. Cotra 2020; see also Nostalgebraist 2022).

Outline. If you want a short version of the argument, sections I and II (700 words) are the heart of the post. Additionally, the section titles are themselves summaries, and we use text formatting to highlight key ideas.

Real interest rates reflect, among other things:

This claim is compactly summarized in the “Ramsey rule” (and the only math that we will introduce in this post), a version of the “Euler equation” that in one form or another lies at the heart of every theory and model of dynamic macroeconomics:

r = ρ + σg

where:

(Internalizing the meaning of these Greek letters is wholly not necessary.)

While more elaborate macroeconomic theories vary this equation in interesting and important ways, it is common to all of these theories that the real interest rate is higher when either (1) the time discount rate is high or (2) future growth is expected to be high.

We now provide some intuition for these claims.

Time discounting and mortality risk. Time discounting refers to how much people discount the future relative to the present, which captures both (i) intrinsic preference for the present relative to the future and (ii) the probability of death.

The intuition for why the probability of death raises the real rate is the following. Suppose we expect with high probability that humanity will go extinct next year. Then there is no reason to save today: no one will be around to use the savings. This pushes up the real interest rate, since there is less money available for lending.

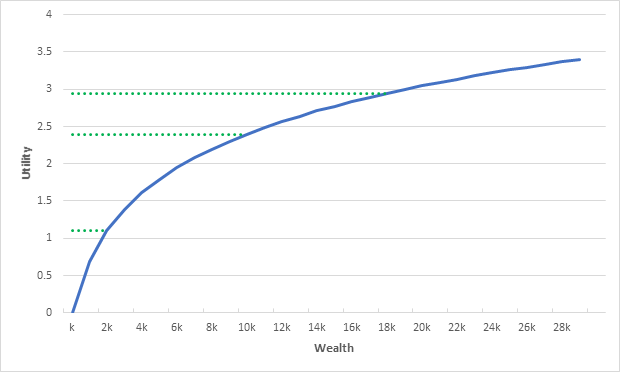

Economic growth. To understand why higher economic growth raises the real interest rate, the intuition is similar. If we expect to be wildly rich next year, then there is also no reason to save today: we are going to be tremendously rich, so we might as well use our money today while we’re still comparatively poor.

(For the formal math of the Euler equation, Baker, Delong, and Krugman 2005 is a useful reference. The core intuition is that either mortality risk or the prospect of utopian abundance reduces the supply of savings, due to consumption smoothing logic, which pushes up real interest rates.)

Transformative AI and real rates. Transformative AI would either raise the risk of extinction (if unaligned), or raise economic growth rates (if aligned).

Therefore, based on the economic logic above, the prospect of transformative AI – unaligned or aligned – will result in high real interest rates. This is the key claim of this post.

As an example in the aligned case, Davidson (2021) usefully defines AI-induced “explosive growth” as an increase in growth rates to at least 30% annually. Under a baseline calibration where σ=1 and ρ=0.01, and importantly assuming growth rates are known with certainty, the Euler equation implies that moving from 2% growth to 30% growth would raise real rates from 3% to 31%!

For comparison, real rates in the data we discuss below have never gone above 5%.

(In using terms like “transformative AI” or “advanced AI”, we refer to the cluster of concepts discussed in Yudkowsky 2008, Bostrom 2014, Cotra 2020, Carlsmith 2021, Davidson 2021, Karnofsky 2022, and related literature: AI technology that precipitates a transition comparable to the agricultural or industrial revolutions.)

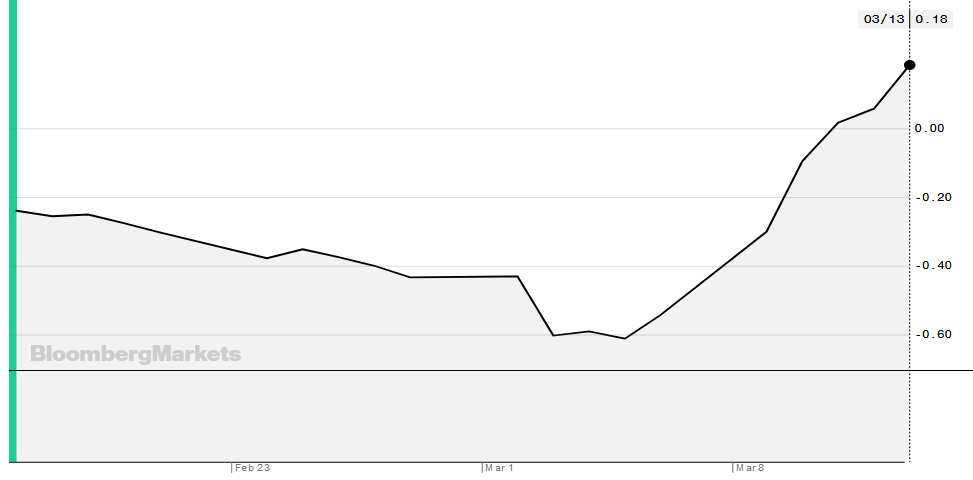

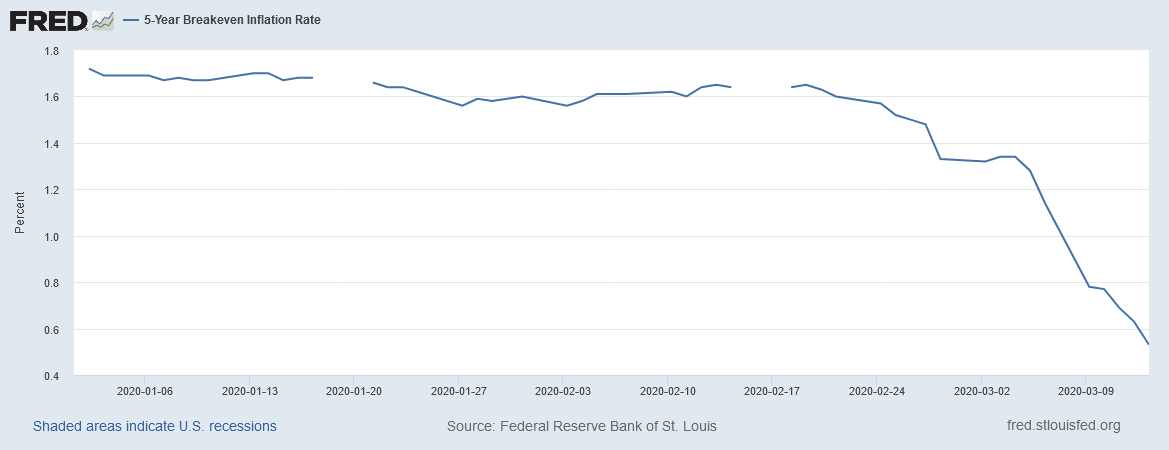

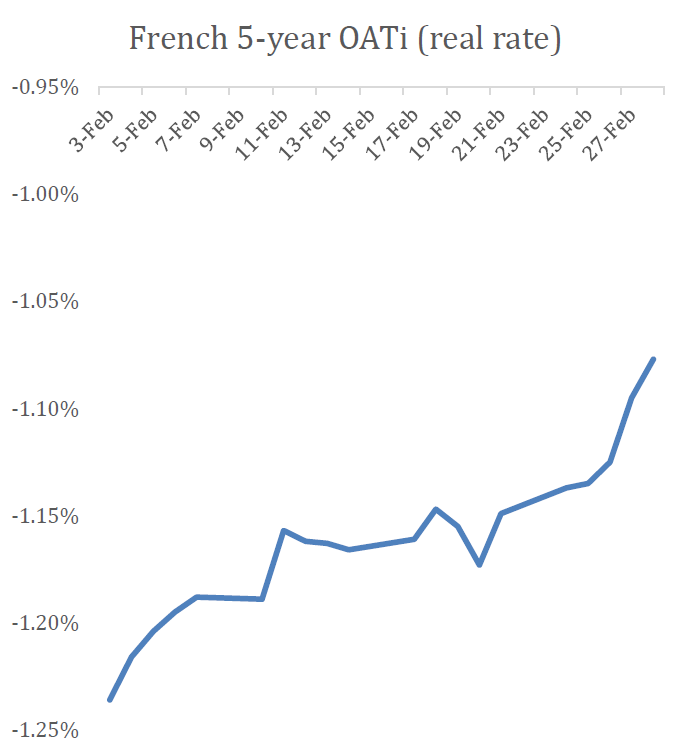

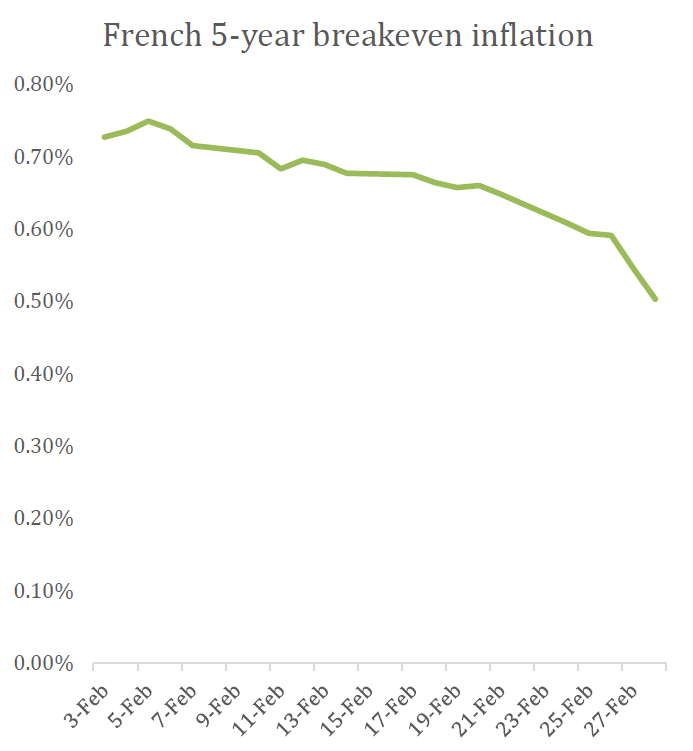

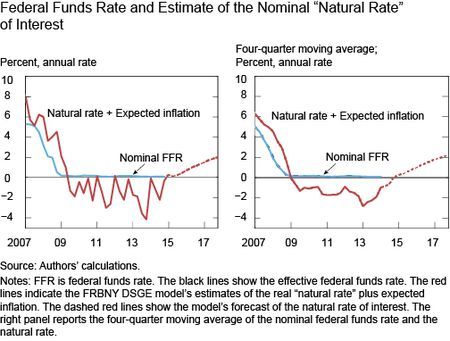

The US 30-year real interest rate ended 2022 at 1.6%. Over the full year it averaged 0.7%, and as recently as March was below zero. Looking at a shorter time horizon, the US 10-year real interest rate is 1.6%, and similarly was below negative one percent as recently as March.

(Data sources used here are explained in section V.)

The UK in autumn 2021 sold a 50-year real bond with a -2.4% rate at the time. Real rates on analogous bonds in other developed countries in recent years have been similarly low/negative for the longest horizons available. Austria has a 100-year nominal bond – being nominal should make its rate higher due to expected inflation – with yields less than 3%.

Thus the conclusion previewed above: financial markets, as evidenced by real interest rates, are not expecting a high probability of either AI-induced growth acceleration or elevated existential risk, on at least a 30-50 year time horizon.

In this section we briefly consider some potentially important complications.

Uncertainty. The Euler equation and the intuition described above assumed certainty about AI timelines, but taking into account uncertainty does not change the core logic. With uncertainty about the future economic growth rate, then the real interest rate reflects the expected future economic growth rate, where importantly the expectation is taken over the risk-neutral measure: in brief, probabilities of different states are reweighted by their marginal utility. We return to this in our quantitative model below.

Takeoff speeds. Nothing in the logic above relating growth to real rates depends on slow vs. fast takeoff speed; the argument can be reread under either assumption and nothing changes. Likewise, when considering the case of aligned AI, rates should be elevated whether economic growth starts to rise more rapidly before advanced AI is developed or only does so afterwards. What matters is that GDP – or really, consumption – ends up high within the time horizon under consideration. As long as future consumption will be high within the time horizon, then there is less motive to save today (“consumption smoothing”), pushing up the real rate.

Inequality. The logic above assumed that the development of transformative AI affects everyone equally. This is a reasonable assumption in the case of unaligned AI, where it is thought that all of humanity will be evaporated. However, when considering aligned AI, it may be thought that only some will benefit, and therefore real interest rates will not move much: if only an elite Silicon Valley minority is expected to have utopian wealth next year, then everyone else may very well still choose to save today.

It is indeed the case that inequality in expected gains from transformative AI would dampen the impact on real rates, but this argument should not be overrated. First, asset prices can be crudely thought of as reflecting a wealth-weighted average across investors. Even if only an elite minority becomes fabulously wealthy, it is their desire for consumption smoothing which will end up dominating the determination of the real rate. Second, truly transformative AI leading to 30%+ economy-wide growth (“Moore’s law for everything”) would not be possible without having economy-wide benefits.

Stocks. One naive objection to the argument here would be the claim that real interest rates sound like an odd, arbitrary asset price to consider; certainly stock prices are the asset price that receive the most media attention.

In appendix 1, we explain that the level of the real interest rate affects every asset price: stocks for instance reflect the present discounted value of future dividends; and real interest rates determine the discount rate used to discount those future dividends. Thus, if real interest rates are ‘wrong’, every asset price is wrong. If real interest rates are wrong, a lot of money is on the table, a point to which we return in section X.

We also argue that stock prices in particular are not a useful indicator of market expectations of AI timelines. Above all, high stock prices of chipmakers or companies like Alphabet (parent of DeepMind) could only reflect expectations for aligned AI and could not be informative of the risk of unaligned AI. Additionally, as we explain further in the appendix, aligned AI could even lower equity prices, by pushing up discount rates.

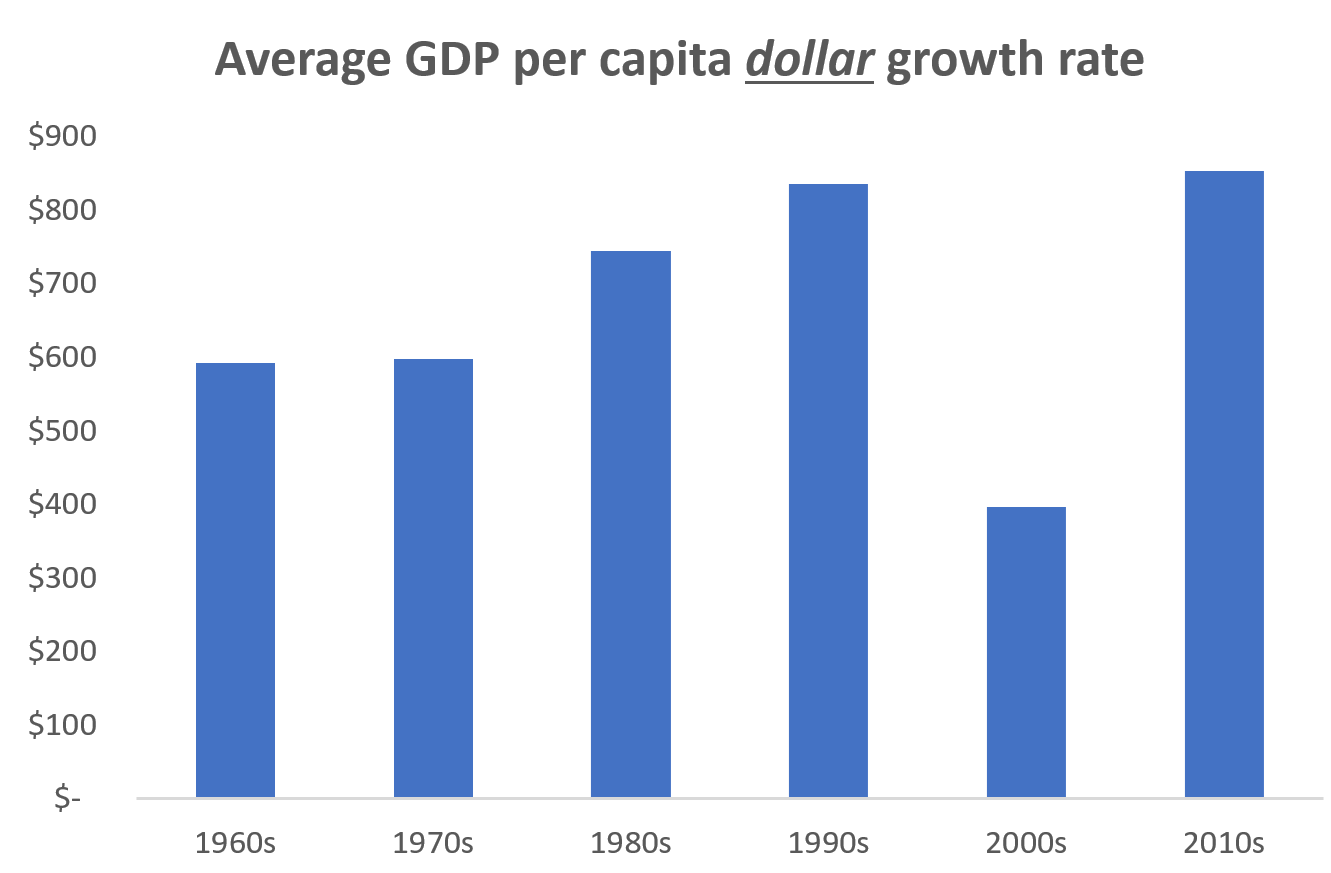

In section I, we gave theoretical intuition for why higher expected growth or higher existential risk would result in higher interest rates: expectations for such high growth or mortality risk would lead people to want to save less and borrow more today. In this section and the next two, we showcase some simple empirical evidence that the predicted relationships hold in the available data.

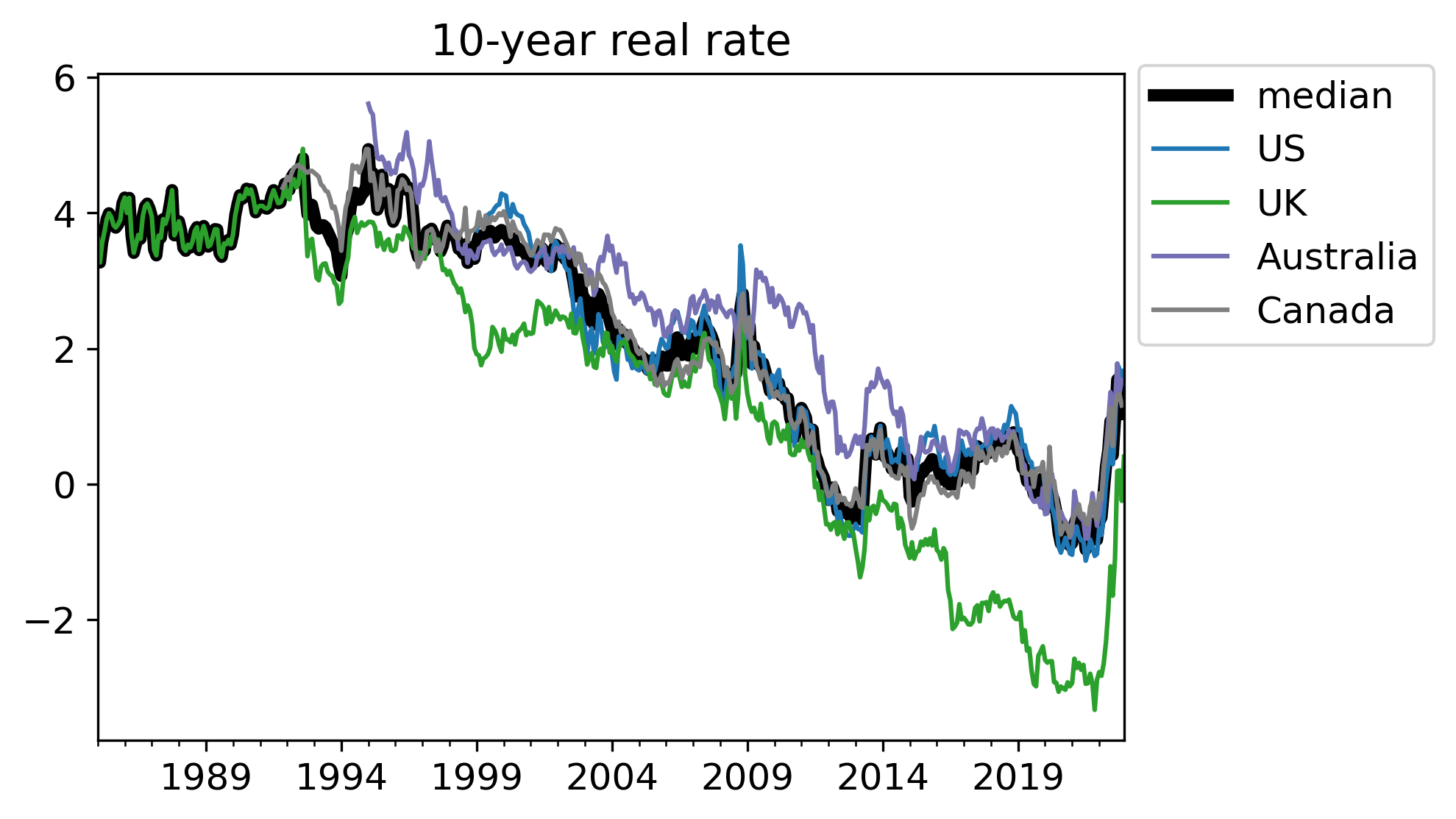

Measuring real rates. To compare historical real interest rates to historical growth, we need to measure real interest rates.

Most bonds historically have been nominal, where the yield is not adjusted for changes in inflation. Therefore, the vast majority of research studying real interest rates starts with nominal interest rates, attempts to construct an estimate of expected inflation using some statistical model, and then subtracts this estimate of expected inflation from the nominal rate to get an estimated real interest rate. However, constructing measures of inflation expectations is extremely difficult, and as a result most papers in this literature are not very informative.

Additionally, most bonds historically have had some risk of default. Adjusting for this default premium is also extremely difficult, which in particular complicates analysis of long-run interest rate trends.

The difficulty in measuring real rates is one of the main causes, in our view, of Tyler Cowen’s Third Law: “all propositions about real interest rates are wrong”. Throughout this piece, we are badly violating this (Godelian) Third Law. In appendix 2, we expand on our argument that the source of Tyler’s Third Law is measurement issues in the extant literature, together with some separate, frequent conceptual errors.

Our approach. We take a more direct approach.

Real rates. For our primary analysis, we instead use market real interest rates from inflation-linked bonds. Because we use interest rates directly from inflation-linked bonds – instead of constructing shoddy estimates of inflation expectations to use with nominal interest rates – this approach avoids the measurement issue just discussed (and, we argue, allows us to escape Cowen’s Third Law).

To our knowledge, prior literature has not used real rates from inflation-linked bonds only because these bonds are comparatively new. Using inflation-linked bonds confines our sample to the last ∼20 years in the US, the last ∼30 in the UK/Australia/Canada. Before that, inflation-linked bonds didn’t exist. Other countries have data for even fewer years and less liquid bond markets.

(The yields on inflation-linked bonds are not perfect measures of real rates, because of risk premia, liquidity issues, and some subtle issues with the way these securities are structured. You can build a model and attempt to strip out these issues; here, we will just use the raw rates. If you prefer to think of these empirics as “are inflation-linked bond yields predictive of future real growth” rather than “are real rates predictive of future real growth”, that interpretation is still sufficient for the logic of this post.)

Nominal rates. Because there are only 20 or 30 years of data on real interest rates from inflation-linked bonds, we supplement our data by also considering unadjusted nominal interest rates. Nominal interest rates reflect real interest rates plus inflation expectations, so it is not appropriate to compare nominal interest rates to real GDP growth.

Instead, analogously to comparing real interest rates to real GDP growth, we compare nominal interest rates to nominal GDP growth. The latter is not an ideal comparison under economic theory – and inflation variability could swamp real growth variability – but we argue that this approach is simple and transparent.

Looking at nominal rates allows us to have a very large sample of countries for many decades: we use OECD data on nominal rates available for up to 70 years across 39 countries.

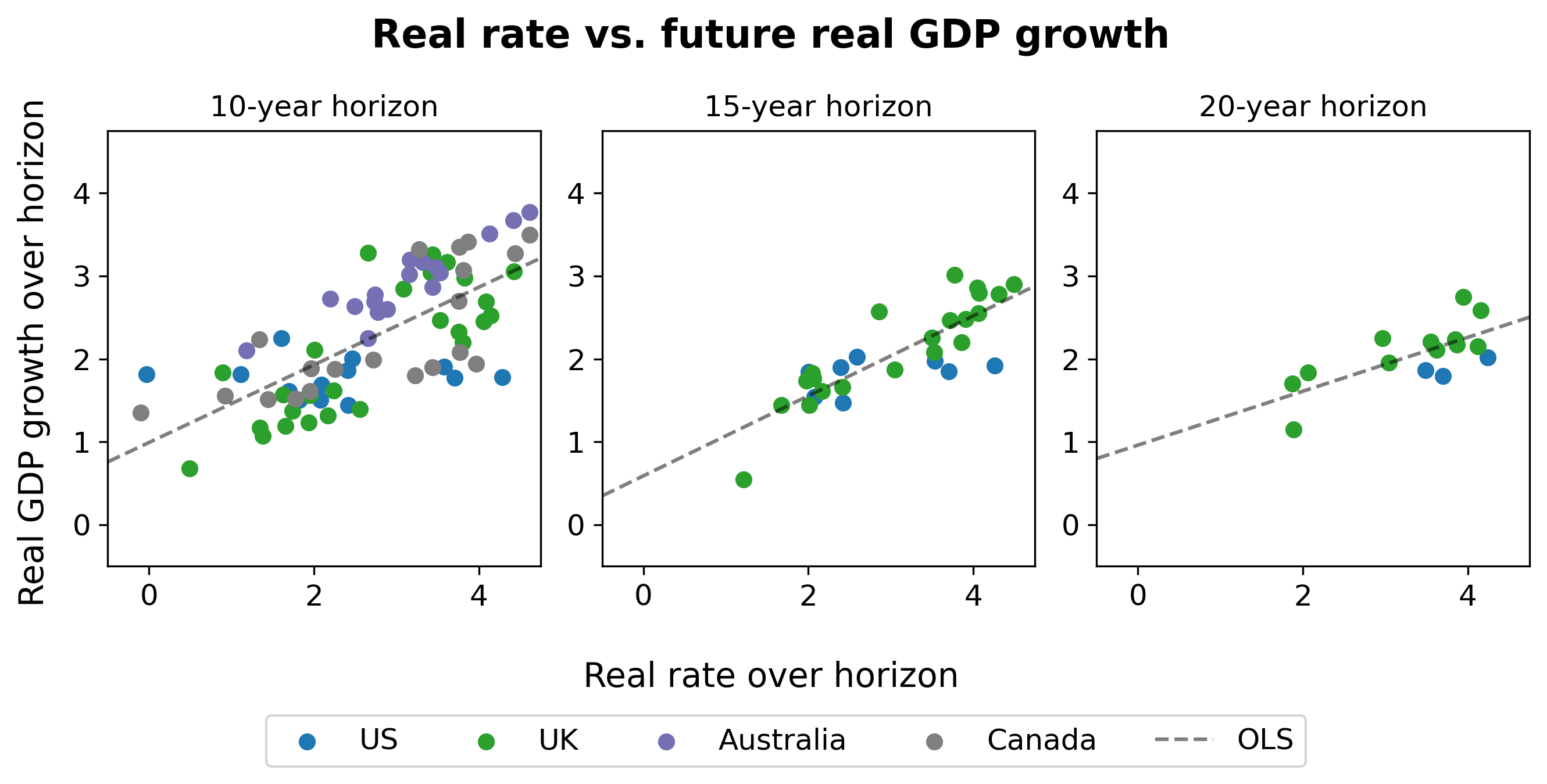

The goal of this section is to show that real interest rates have correlated with future real economic growth, and secondarily, that nominal interest rates have correlated with future nominal economic growth. We also briefly discuss the state of empirical evidence on the correlation between real rates and existential risk.

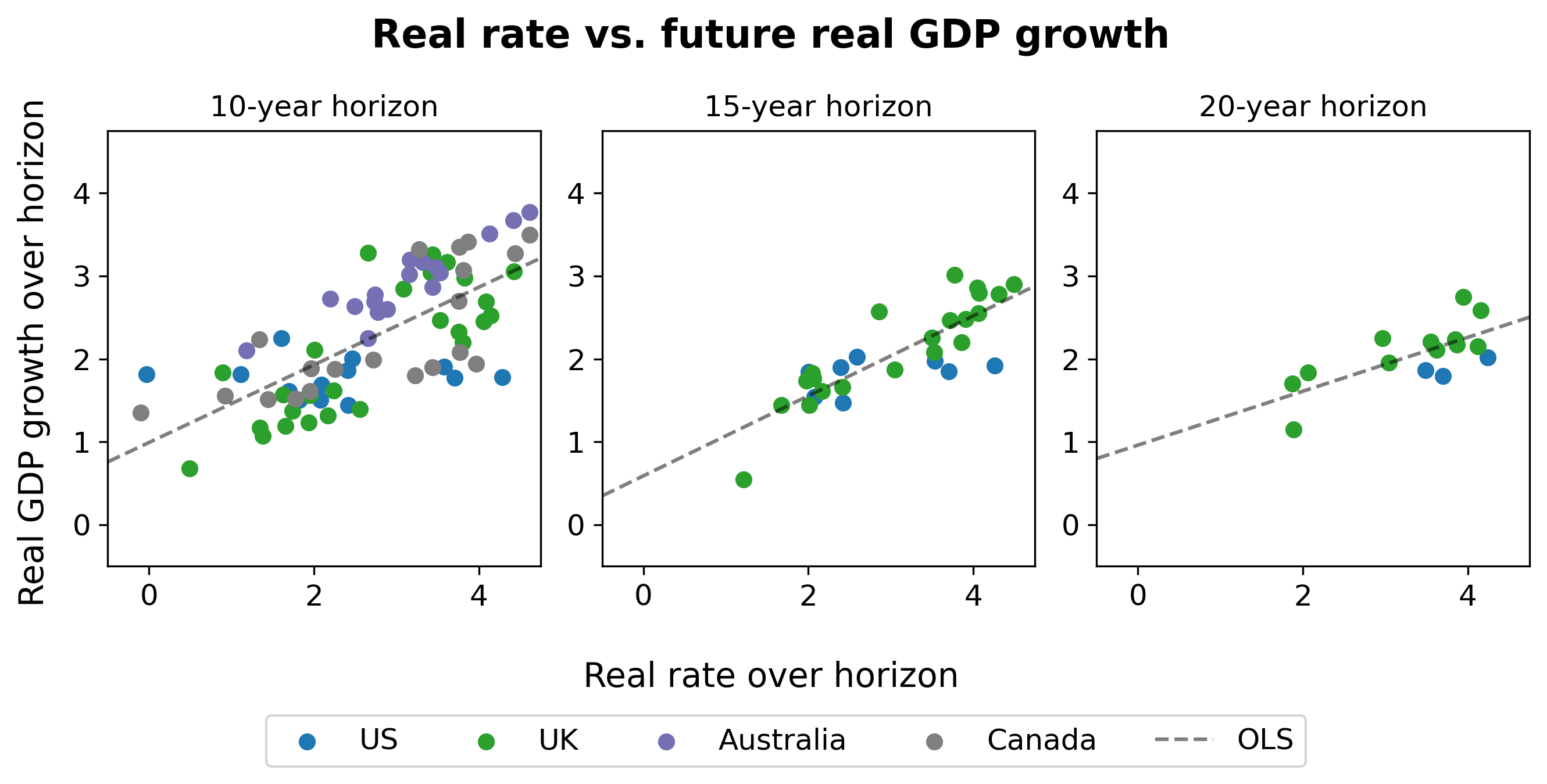

Real rates vs. real growth. A first cut at the data suggests that, indeed, higher real rates today predict higher real growth in the future:

To see how to read these graphs, take the left-most graph (“10-year horizon”) for example. The x-axis shows the level of the real interest rate, as reflected on 10-year inflation linked bonds. The y-axis shows average real GDP growth over the following 10 years.

The middle and right hand graphs show the same, at the 15-year and 20-year horizons. The scatter plot shows all available data for the US (since 1999), the UK (since 1985), Australia (since 1995), and Canada (since 1991). (Data for Australia and Canada is only available at the 10-year horizon, and comes from Augur Labs.)

Eyeballing the figure, there appears to be a strong relationship between real interest rates today and future economic growth over the next 10-20 years.

To our knowledge, this simple stylized fact is novel.

Caveats. “Eyeballing it” is not a formal econometric method; but, this is a blog post not a journal article (TIABPNAJA). We do not perform any formal statistical tests here, but we do want to acknowledge some important statistical points and other caveats.

First, the data points in the scatter plot are not statistically independent: real rates and growth are both persistent variables; the data points contain overlapping periods; and growth rates in these four countries are correlated. These issues are evident even from eyeballing the time series. Second, of course this relationship is not causally identified: we do not have exogenous variation in real growth rates. (If you have ideas for identifying the causal effect of higher real growth expectations on real rates, we would love to discuss with you.)

Relatedly, many other things are changing in the world which are likely to affect real rates. Population growth is slowing, retirement is lengthening, the population is aging. But under AI-driven “explosive” growth – again say 30%+ annual growth, following the excellent analysis of Davidson (2021) – then, we might reasonably expect that this massive of an increase in the growth rate would drown out the impact of any other factors.

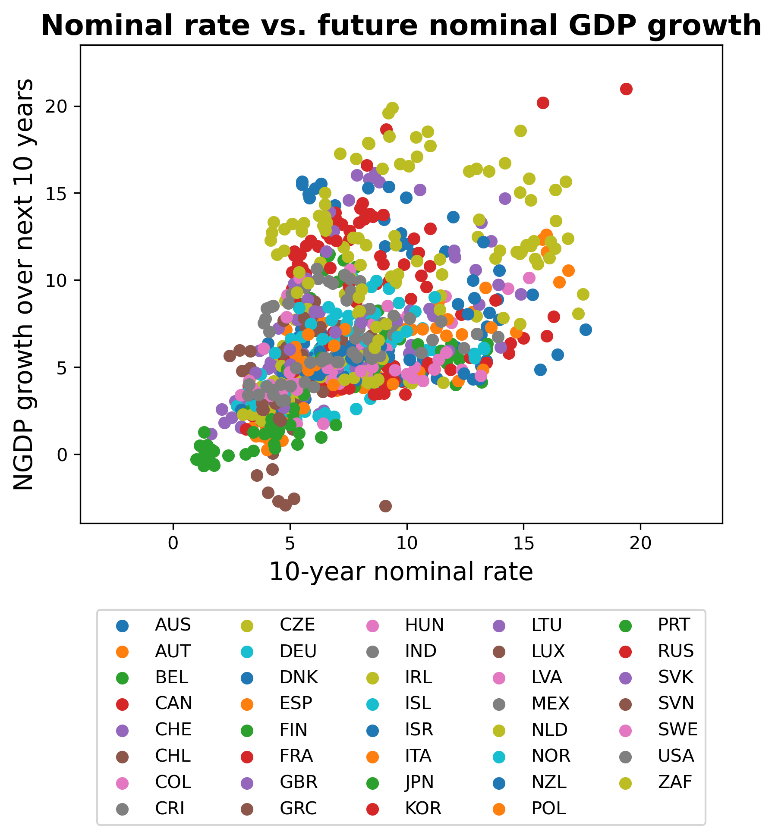

Nominal rates vs. nominal growth. Turning now to evidence from nominal interest rates, recall that the usefulness of this exercise is that while there only exists 20 or 30 years of data on real interest rates for two countries, there is much more data on nominal interest rates.

We simply take all available data on 10-year nominal rates from the set of 39 OECD countries since 1954. The following scatterplot compares the 10-year nominal interest versus nominal GDP growth over the succeeding ten years by country:

Again, there is a strong positive – if certainly not perfect – relationship. (For example, the outlier brown dots at the bottom of the graph are Greece, whose high interest rates despite negative NGDP growth reflect high default risk during an economic depression.)

The same set of nontrivial caveats apply to this analysis as above.

We consider this data from nominal rates to be significantly weaker evidence than the evidence from real rates, but corroboration nonetheless.

Backing out market-implied timelines. Taking the univariate pooled OLS results from the real rate data far too seriously, the fact that the 10-year real rate in the US ended 2022 at 1.6% would predict average annual real GDP growth of 2.6% over the next 10 years in the US; the analogous interest rate of -0.2% in the UK would predict 0.7% annual growth over the next 10 years in the UK. Such growth rates, clearly, are not compatible with the arrival of transformative aligned AI within this horizon.

We have argued that in the theory, real rates should be higher in the face of high economic growth or high mortality risk; empirically, so far, we have only shown a relationship between real rates and growth, but not between real rates and mortality.

Showing that real rates accurately reflect changes in existential risk is very difficult, because there is no word-of-god measurement of how existential risk has evolved over time.

We would be very interested in pursuing new empirical research examining “asset pricing under existential risk”. In appendix 3, we perform a scorched-earth literature review and find essentially zero existing empirical evidence on real rates and existential risks.

Disaster risk. In particular, the extant literature does not study existential risks but instead “merely” disaster risks, under which real assets are devastated but humanity is not exterminated. Disaster risks do not necessarily raise real rates – indeed, such risks are thought to lower real rates due to precautionary savings. That notwithstanding, some highlights of the appendix review include a small set of papers finding that individuals with a higher perceived risk of nuclear conflict during the Cold War saved less, as well as a paper noting that equities which were headquartered in cities more likely to be targeted by Soviet missiles did worse during the Cuban missile crisis (see also). Our assessment is that these and the other available papers on disaster risks discussed in the appendix have severe limitations for the purposes here.

Individual mortality risk. We judge that the best evidence on this topic comes instead from examining the relationship between individual mortality risk and savings/investment behavior. The logic we provided was that if humanity will be extinct next year, then there is no reason to save, pushing up the real rate. Similar logic says that at the individual level, a higher risk of death for any reason should lead to lower savings and less investment in human capital. Examples of lower savings at the individual level need not raise interest rates at the economy-wide level, but do provide evidence for the mechanism whereby extinction risk should lead to lower saving and thus higher interest rates.

One example comes from Malawi, where the provision of a new AIDS therapy caused a significant increase in life expectancy. Using spatial and temporal variation in where and when these therapeutics were rolled out, it was found that increased life expectancy results in more savings and more human capital investment in the form of education spending. Another experiment in Malawi provided information to correct pessimistic priors about life expectancy, and found that higher life expectancy directly caused more investment in agriculture and livestock.

A third example comes from testing for Huntington’s disease, a disease which causes a meaningful drop in life expectancy to around 60 years. Using variation in when people are diagnosed with Huntington’s, it has been found that those who learn they carry the gene for Huntington’s earlier are 30 percentage points less likely to finish college, which is a significant fall in their human capital investment.

Studying the effect on savings and real rates from increased life expectancy at the population level is potentially intractable, but would be interesting to consider further. Again, in our assessment, the best empirical evidence available right now comes from the research on individual “existential” risks and suggests that real rates should increase with existential risk.

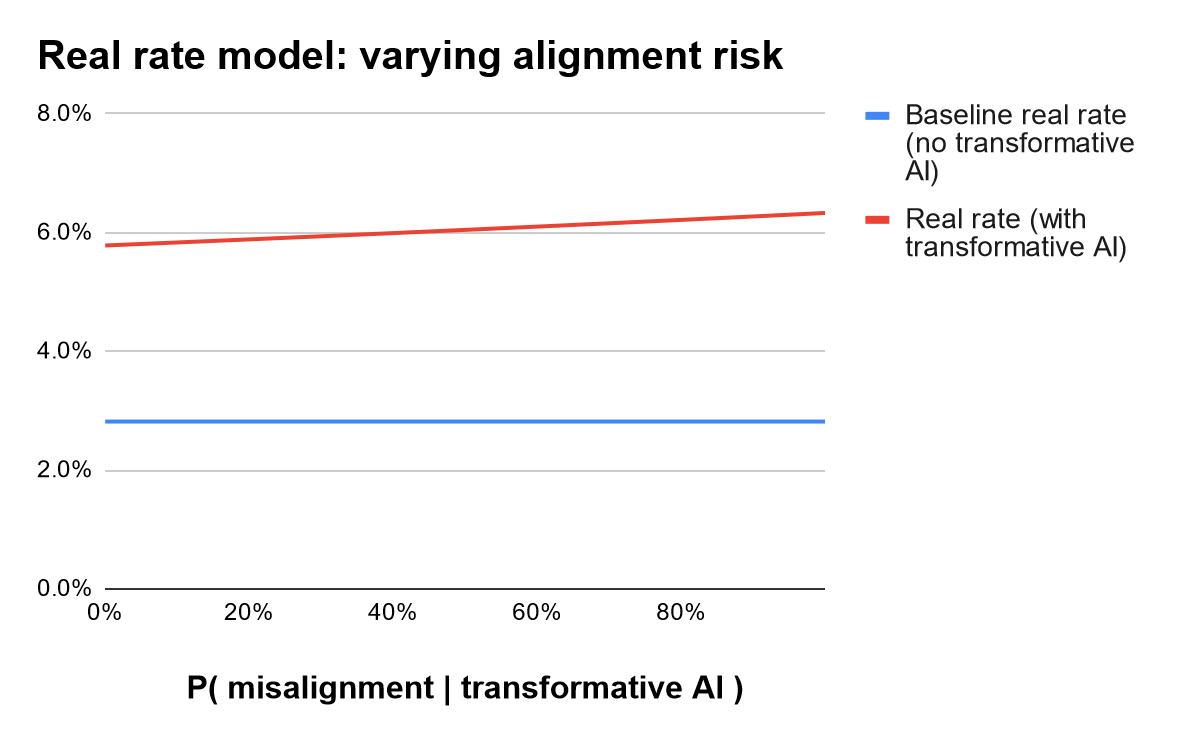

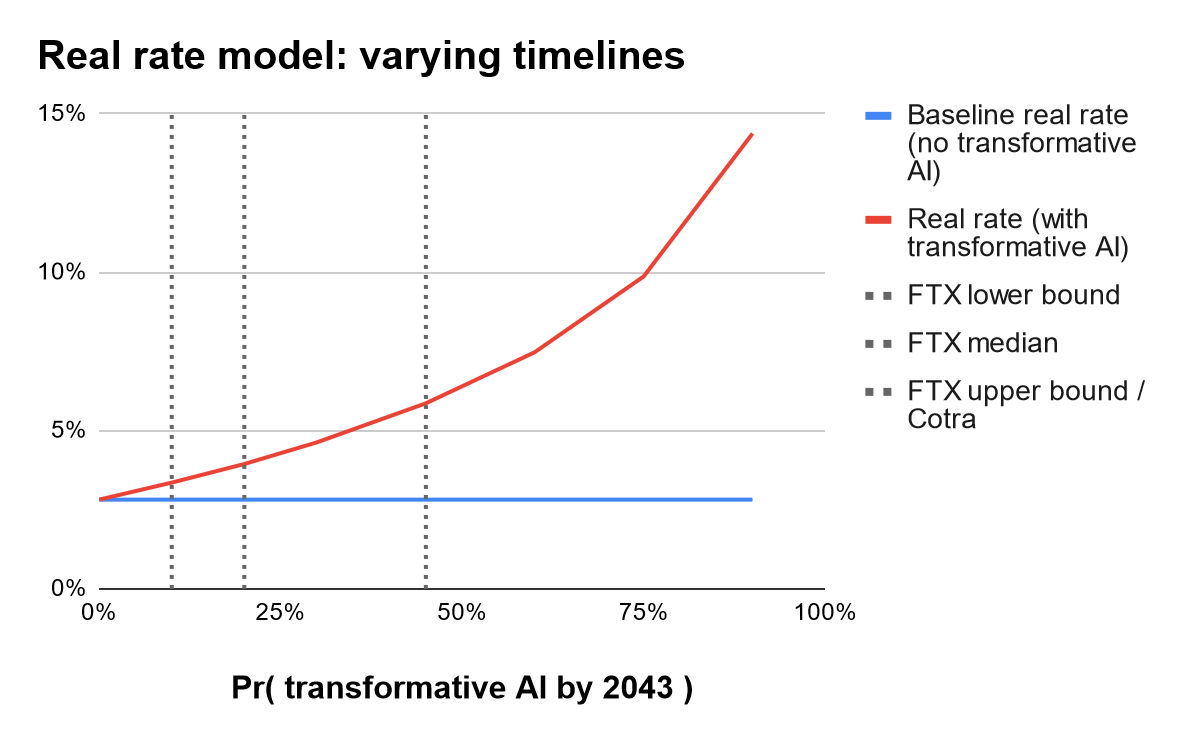

Section VI used historical data to go from the current real rate to a very crude market-implied forecast of growth rates; in this section, we instead use a model to go from existing forecasts of AI timelines to timeline-implied real rates. We aim to show that under short AI timelines, real interest rates would be unrealistically elevated.

This is a useful exercise for three reasons. First, the historical data is only able to speak to growth forecasts, and therefore only able to provide a forecast under the possibly incorrect assumption of aligned AI. Second, the empirical forecast assumes a linear relationship between the real rate and growth, which may not be reasonable for a massive change caused by transformative AI. Third and quite important, the historical data cannot transparently tell us anything about uncertainty and the market’s beliefs about the full probability distribution of AI timelines.

We use the canonical (and nonlinear) version of the Euler equation – the model discussed in section I – but now allow for uncertainty on both how soon transformative AI will be developed and whether or not it will be aligned. The model takes as its key inputs (1) a probability of transformative AI each year, and (2) a probability that such technology is aligned.

The model is a simple application of the stochastic Euler equation under an isoelastic utility function. We use the following as a baseline, before considering alternative probabilities:

Thus, to summarize: by default, GDP grows at 1.8% per year. Every year, there is some probability (based on Cotra) that transformative AI is developed. If it is developed, there is a 15% probability the world ends, and an 85% chance GDP growth jumps to 30% per year.

We have built a spreadsheet here that allows you to tinker with the numbers yourself, such as adjusting the growth rate under aligned AI, to see what your timelines and probability of alignment would imply for the real interest rate. (It also contains the full Euler equation formula generating the results, for those who want the mathematical details.) We first estimate real rates under the baseline calibration above, before considering variations in the critical inputs.

Baseline results. The model predicts that under zero probability of transformative AI, the real rate at any horizon would be 2.8%. In comparison, under the baseline calibration just described based on Cotra timelines, the real rate at a 30-year horizon would be pushed up to 5.9% – roughly three percentage points higher.

For comparison, the 30-year real rate in the US is currently 1.6%.

While the simple Euler equation somewhat overpredicts the level of the real interest rate even under zero probability of transformative AI – the 2.8% in the model versus the 1.6% in the data – this overprediction is explainable by the radical simplicity of the model that we use and is a known issue in the literature. Adding other factors (e.g. precautionary savings) to the model would lower the level. Changing the level does not change its directional predictions, which help quantitatively explain the fall in real rates over the past ∼30 years.

Therefore, what is most informative is the three percentage point difference between the real rate under Cotra timelines (5.9%) versus under no prospect of transformative AI (2.8%): Cotra timelines imply real interest rates substantially higher than their current levels.

Now, from this baseline estimate, we can also consider varying the key inputs.

Varying assumptions on P(misaligned|AGI). First consider changing the assumption that advanced AI is 15% likely to be unaligned (conditional on the development of AGI). Varying this parameter does not have a large impact: moving from 0% to 100% probability of misalignment raises the model’s predicted real rate from 5.8% only to 6.3%.

Varying assumptions on timelines. Second, consider making timelines shorter or longer. In particular, consider varying the probability of development by 2043, which we use as a benchmark per the FTX Future Fund.

We scale the Cotra timelines up and down to vary the probability of development by 2043. (Specifically: we target a specific cumulative probability of development by 2043; and, following Cotra, if the annual probability up until 2030 is x, then it is 1.5x in the subsequent seven years up through 2036, and it is 2x in the remaining years of the 30-year window.)

As the next figure shows and as one might expect, shorter AI timelines have a very large impact on the model’s estimate for the real rate.

These results strongly suggest that any timeline shorter than or equal to the Cotra timeline is not being expected by financial markets.

While it is not possible to back out exact numbers for the market’s implicit forecast for AI timelines, it is reasonable to say that the market is decisively rejecting – i.e., putting very low probability on – the development of transformative AI in the very near term, say within the next ten years.

Consider the following examples of extremely short timelines:

Real rate movements of these magnitudes are wildly counterfactual. As previously noted, real rates in the data used above have never gone above even 5%.

Stagnation. As a robustness check, in the configurable spreadsheet we allow you to place some yearly probability on the economy stagnating and growing at 0% per year from thereon. Even with a 20% chance of stagnation by 2053 (higher than realistic), under Cotra timelines, the model generates a 2.1% increase in 30-year rates.

Recent market movements. Real rates have increased around two percentage points since the start of 2022, with the 30-year real rate moving from -0.4% to 1.6%, approximately the pre-covid level. This is a large enough move to merit discussion. While this rise in long-term real rates could reflect changing market expectations for timelines, it seems much more plausible that high inflation, the Russia-Ukraine war, and monetary policy tightening have together worked to drive up short-term real rates and the risk premium on long-term real rates.

Should we update on the fact that markets are not expecting very short timelines?

Probably!

As a prior, we think that market efficiency is reasonable. We do not try to provide a full defense of the efficient markets hypothesis (EMH) in this piece given that it has been debated ad nauseum elsewhere, but here is a scaffolding of what such an argument would look like.

Loosely, the EMH says that the current price of any security incorporates all public information about it, and as such, you should not expect to systematically make money by trading securities.

This is simply a no-arbitrage condition, and certainly no more radical than supply and demand: if something is over- or under-priced, you’ll take action based on that belief until you no longer believe it. In other words, you’ll buy and sell it until you think the price is right. Otherwise, there would be an unexploited opportunity for profit that was being left on the table, and there are no free lunches when the market is in equilibrium.

As a corollary, the current price of a security should be the best available risk-adjusted predictor of its future price. Notice we didn’t say that the price is equal to the “correct” fundamental value. In fact, the current price is almost certainly wrong. What we did say is that it is the best guess, i.e. no one knows if it should be higher or lower.

Testing this hypothesis is difficult, in the same way that testing any equilibrium condition is difficult. Not only is the equilibrium always changing, there is also a joint hypothesis problem which Fama (1970) outlined: comparing actual asset prices to “correct” theoretical asset prices means you are simultaneously testing whatever asset pricing model you choose, alongside the EMH.

In this sense, it makes no sense to talk about “testing” the EMH. Rather, the question is how quickly prices converge to the limit of market efficiency. In other words, how fast is information diffusion? Our position is that for most things, this is pretty fast!

Here are a few heuristics that support our position:

Remember: if real interest rates are wrong, all financial assets are mispriced. If real interest rates “should” rise three percentage points or more, that is easily hundreds of billions of dollars worth of revaluations. It is unlikely that sharp market participants are leaving billions of dollars on the table.

While our prior in favor of efficiency is fairly strong, the market could be currently failing to anticipate transformative AI, due to various limits to arbitrage.

However, if you do believe the market is currently wrong about the probability of short timelines, then we now argue there are two courses of action you should consider taking:

Under the logic argued above, if you genuinely believe that AI timelines are short, then you should consider putting your money where your mouth is: bet that real rates will rise when the market updates, and potentially earn a lot of money if markets correct. Shorting (or going underweight) government debt is the simplest way of expressing this view.

Indeed, AI safety researcher Paul Christiano has written publicly that he is (or was) short 30-year government bonds.

If short timelines are your true belief in your heart of hearts, and not merely a belief in a belief, then you should seriously consider how much money you could earn here and what you could do with those resources.

Implementing the trade. For retail investors, betting against treasuries via ETFs is perhaps simplest. Such trades can be done easily with retail brokers, like Schwab.

(i) For example, one could simply short the LTPZ ETF, which holds long-term real US government debt (effective duration: 20 years).

(ii) Alternatively, if you would prefer to avoid engaging in shorting yourself, there are ETFs which will do the shorting for you, with nominal bonds: TBF is an ETF which is short 20+ year treasuries (duration: 18 years); TBT is the same, but levered 2x; and TTT is the same, but levered 3x. There are a number of other similar options. Because these ETFs do the shorting for you, all you need to do is purchase shares of the ETFs.

Back of the envelope estimate. A rough estimate of how much money is on the table, just from shorting the US treasury bond market alone, suggests there is easily $1 trillion in value at stake from betting that rates will rise.

Alternatively, returning to the LTPZ ETF with its duration of 20 years, a 3 percentage point rise in rates would cause its value to fall by 60%. Using the 3x levered TTT with duration of 18 years, a 3 percentage point rise in rates would imply a mouth-watering cumulative return of 162%.

While fully fleshing out the trade analysis is beyond the scope of this post, this illustration gives an idea of how large the possibilities are.

The alternative to this order-of-magnitude estimate would be to build a complete bond pricing model to estimate more precisely the expected returns of shorting treasuries. This would need to take into account e.g. the convexity of price changes with interest rate movements, the varied maturities of outstanding bonds, and the different varieties of instruments issued by the Treasury. Further refinements would include trading derivatives (e.g. interest rate futures) instead of shorting bonds directly, for capital efficiency, and using leverage to increase expected returns.

Additionally, the analysis could be extended beyond the US government debt market, again since changes to real interest rates would plausibly impact the price of every asset: stocks, commodities, real estate, everything.

(If you would be interested in fully scoping out possible trades, we would be interested in talking.)

Trade risk and foom risk. We want to be clear that – unless you are risk neutral, or can borrow without penalty at the risk-free rate, or believe in short timelines with 100% probability – then such a bet would not be a free lunch: this is not an “arbitrage” in the technical sense of a risk-free profit. One risk is that the market moves in the other direction in the short term, before correcting, and that you are unable to roll over your position for liquidity reasons.

The other risk that could motivate not making this bet is the risk that the market – for some unspecified reason – never has a chance to correct, because (1) transformative AI ends up unaligned and (2) humanity’s conversion into paperclips occurs overnight. This would prevent the market from ever “waking up”.

However, to be clear, expecting this specific scenario requires both:

You should be sure that your beliefs are actually congruent with these requirements, if you want to refuse to bet that real rates will rise. Additionally, we will see that the second suggestion in this section (“impatient philanthropy”) is not affected by the possibility of foom scenarios.

If prevailing interest rates are lower than your subjective discount rate – which is the case if you think markets are underestimating prospects for transformative AI – then simple cost-benefit analysis says you should save less or even borrow today.

An illustrative example. As an extreme example to illustrate this argument, imagine that you think that there is a 50% chance that humanity will be extinct next year, and otherwise with certainty you will have the same income next year as you do this year. Suppose the market real interest rate is 0%. That means that if you borrow $10 today, then in expectation you only need to pay $5 off, since 50% of the time you expect to be dead.

It is only if the market real rate is 100% – so that your $10 loan requires paying back $20 next year, or exactly $10 in expectation – that you are indifferent about borrowing. If the market real rate is less than 100%, then you want to borrow. If interest rates are “too low” from your perspective, then on the margin this should encourage you to borrow, or at least save less.

Note that this logic is not affected by whether or not the market will “correct” and real rates will rise before everyone dies, unlike the logic above for trading.

Borrowing to fund philanthropy today. While you may want to borrow today simply to fund wild parties, a natural alternative is: borrow today, locking in “too low” interest rates, in order to fund philanthropy today. For example: to fund AI safety work.

We can call this strategy “impatient philanthropy”, in analogy to the concept of “patient philanthropy”.

This is not a call for philanthropists to radically rethink their cost-benefit analyses. Instead, we merely point out: ensure that your financial planning properly accounts for any difference between your discount rate and the market real rate at which you can borrow. You should not be using the market real rate to do your financial planning. If you have a higher effective discount rate due to your AI timelines, that could imply that you should be borrowing today to fund philanthropic work.

Relationship to impatient philanthropy. The logic here has a similar flavor to Phil Trammell’s “patient philanthropy” argument (Trammell 2021) – but with a sign flipped. Longtermist philanthropists with a zero discount rate, who live in a world with a positive real interest rate, should be willing to save all of their resources for a long time to earn that interest, rather than spending those resources today on philanthropic projects. Short-timeliners have a higher discount rate than the market, and therefore should be impatient philanthropists.

(The point here is not an exact analog to Trammell 2021, because the paper there considers strategic game theoretic considerations and also takes the real rate as exogenous; here, the considerations are not strategic and the endogeneity of the real rate is the critical point.)

We do not claim to have special technical insight into forecasting the likely timeline for the development of transformative artificial intelligence: we do not present an inside view on AI timelines.

However, we do think that market efficiency provides a powerful outside view for forecasting AI timelines and for making financial decisions. Based on prevailing real interest rates, the market seems to be strongly rejecting timelines of less than ten years, and does not seem to be placing particularly high odds on the development of transformative AI even 30-50 years from now.

We argue that market efficiency is a reasonable benchmark, and consequently, this forecast serves as a useful prior for AI timelines. If markets are wrong, on the other hand, then there is an enormous amount of money on the table from betting that real interest rates will rise. In either case, this market-based approach offers a useful framework: either for forecasting timelines, or for asset allocation.

Opportunities for future work. We could have put 1000 more hours into the empirical side or the model, but, TIABPNAJA. Future work we would be interested in collaborating on or seeing includes:

Thanks especially to Leopold Aschenbrenner, Nathan Barnard, Jackson Barkstrom, Joel Becker, Daniele Caratelli, James Chartouni, Tamay Besiroglu, Joel Flynn, James Howe, Chris Hyland, Stephen Malina, Peter McLaughlin, Jackson Mejia, Laura Nicolae, Sam Lazarus, Elliot Lehrer, Jett Pettus, Pradyumna Prasad, Tejas Subramaniam, Karthik Tadepalli, Phil Trammell, and participants at ETGP 2022 for very useful conversations on this topic and/or feedback on drafts.

Update: we have now posted a comment summarising our responses to the feedback we have received so far.

OpenAI’s ChatGPT model on what will happen to real rates if transformative AI is developed:

Some framings you can use to interpret this post:

Note: This is an appendix to “AGI and the EMH: markets are not expecting aligned or unaligned AI in the next 30 years”. Joint with Trevor Chow and J. Zachary Mazlish..

One naive objection would be the claim that real interest rates sound like an odd, arbitrary asset price to consider. Certainly, real interest rates are not frequently featured in newspaper headlines – if any interest rates are quoted, it is typically nominal interest rates – and stock prices receive by far the most popular attention.

The importance of real rates. However, even if real interest rates are not often discussed, real interest rates affect every asset price. This is because asset prices always reflect some discounted value of future cash flows: for example, the price of Alphabet stock reflects the present discounted value of future Alphabet dividend payments. These future dividend payments are discounted using a discount rate which is determined by the prevailing real interest rate. Thus the claim that real interest rates affect every asset price.

As a result, if real interest rates are ‘wrong’, every asset price is wrong. If real interest rates are wrong, a lot of money is on the table.

Stocks are hard to interpret. It may nonetheless be tempting to look at stock prices to attempt to interpret how the market is thinking about AI timelines (e.g. Ajeya Cotra; Matthew Barnett; /r/ssc). It may be tempting to consider the high market capitalization of Alphabet as reflecting market expectations for large profits generated by DeepMind’s advancing capabilities, or TSMC’s market cap as reflecting market expectations for the chipmaker to profit from AI progress.

However, extracting AI-related expectations from stock prices is a very challenging exercise – to the point that we believe it is simply futile – for four reasons.

If you want to use market prices to predict AI timelines, using equities is not a great way to do it.

In contrast, real interest rates do not suffer from these problems.

Joint with Trevor Chow and J. Zachary Mazlish

Note: This is an appendix to “AGI and the EMH: markets are not expecting aligned or unaligned AI in the next 30 years”.

Throughout the body of the main post, we are badly violating Tyler Cowen’s Third Law: “all propositions about real interest rates are wrong”.

The origin of this (self-referential) idea is that there are many conflicting claims about real interest rates. One way to see this point is this thread from Jo Michell listing seventy different theories for the determination of real and nominal interest rates.

We do think Tyler’s Third Law is right – economists do not have a sufficiently good understanding of real interest rates – and we speculate that there are three reasons for this poor understanding.

1. Real vs. nominal interest rates. A basic problem is that many casual observers simply conflate nominal interest rates and real interest rates, failing to distinguish them. This muddies many discussions about “interest rates”, since nominal and real rates are driven by different factors.

2. Adjusting for inflation and default risk. Another extremely important part of the problem, discussed at length in section IV of the main post, is that there did not exist a market-based measure of risk-free, real interest rates until the last 2-3 decades, with the advent of inflation-linked bonds and inflation swaps.

Most analyses instead use nominal rates – where in contrast there are centuries of data – and try to construct a measure of expected inflation in order to estimate real interest rates via the Fisher equation (e.g. Lunsford and West 2019). Crucially, the crude attempts to measure expected inflation create extensive distortions in these analyses.

Even more problematically, much of the historical data on nominal interest rates comes from bonds that were not just nominal but also were risky (e.g. Schmelzing 2020): historical sovereigns had high risk of default. Adjusting for default risk is extremely difficult, just like adjusting for inflation expectations, and also creates severe distortions in analyses.

3. Drivers of short-term real rates are different from those for long-term rates. Finally, another important issue in discourse around real interest rates is that the time horizon really matters.

In particular: our best understanding of the macroeconomy predicts that real rates should have very different drivers in the short run versus in the long run.This short run versus long run distinction is blurry and vague, so it is difficult to separate the data to do the two relevant analyses of “what drives short-term real rates” versus “what drives long-term real rates”. Much analysis simply ignores the distinction.

---

Together, one or more of these three issues – the nominal-real distinction; the lack of historical risk-free inflation-linked bonds; and the short- vs. long-run distinction – tangles up most research and popular discourse on real interest rates.

Hence, Tyler’s Third Law: “all propositions about real interest rates are wrong”.

In the main post, we hope that by our use of data from inflation-linked bonds – rather than shoddily constructing pseudo data on inflation expectations, to use with nominal bond data – and being careful to work exclusively with long-run real rates, we have avoided the Third Law.

---

The above figure is from the main post. To see how to read these graphs, take for example the left-most graph (“10-year horizon”) and pick a green dot. The x-axis then shows the level of the real interest rate in the UK, as reflected on 10-year inflation linked bonds, in some given year. The y-axis shows average real GDP growth over the following 10 years from that point. For data discussion and important statistical notes, see the main post.

I have created a new set of questions on the forecasting platform Metaculus to help predict what monetary policy will look like over the next three decades. These questions accompany a “fortified essay” located here which offers context on the importance of these questions, which I expand on below.

You, yes you, can go forecast on these questions right now – or even go submit your own questions if you’re dissatisfied with mine.

My hope is that these forecasts will be, at the least, marginally useful for those thinking about how to design policy – but also useful to researchers (e.g.: me!) in determining which research will be most relevant in coming decades.

Below I give some background on Metaculus for those not already familiar, and I offer some thoughts on my choice of questions and their design.

I. Brief background on Metaculus

The background on Metaculus is that the website allows anyone to register an account and forecast on a huge variety of questions: from will Trump win the 2024 election (27%) to Chinese annexation of Taiwan by 2050 (55%) to nanotech FDA approval by 2031 (62%). Interestingly, the questions need not be binary yes/no and instead can be date-based – e.g. year AGI developed (2045); or nonbinary – e.g. number of nuclear weapons used offensively by 2050 (1.10).

Metaculus is not a prediction market: you do not need to bet real money to participate, and conversely there is no monetary incentive for accuracy.

This is an important shortcoming! THE reason markets are good at aggregating dispersed information and varying beliefs is the possibility of arbitrage. Arbitrage is not possible here.

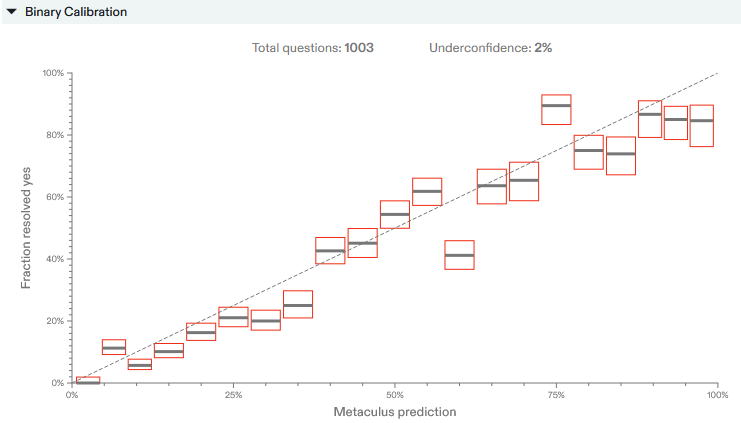

II. Metaculus is surprisingly accurate

Nonetheless, Metaculus has both a surprisingly active userbase and, as far as I can tell, a surprisingly good track record? Their track record page has some summary statistics.

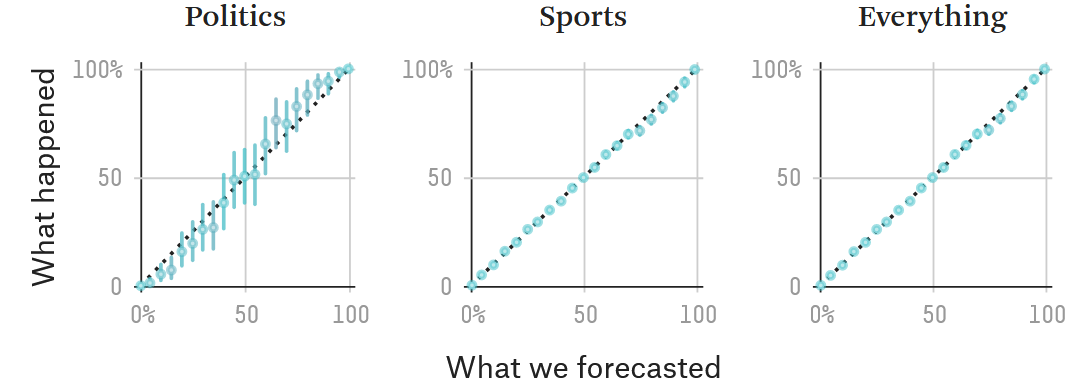

For binary yes/no questions, taking the Metaculus forecast at 25% of the way through the question lifetime, the calibration chart looks like this:

The way to read this chart is that, for questions where Metaculus predicts a (for example) 70% probability of a “YES” outcome, it happens 67.5% of the time on average.

For comparison, here is FiveThirtyEight’s calibration chart:

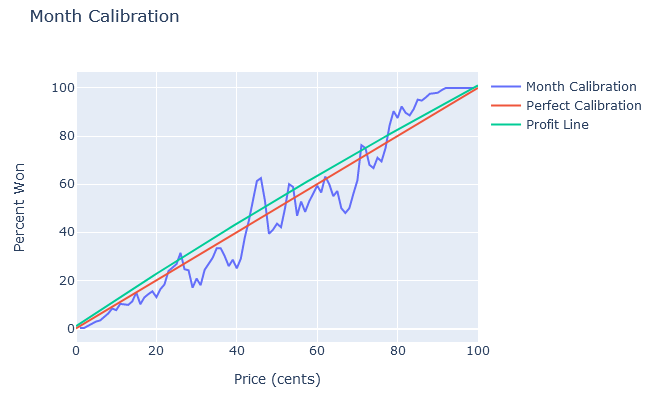

And here is an actual prediction market, PredictIt, using 9 months’ worth of data collected by Jake Koenig on 567 markets:

(See also: Arpit Gupta’s great analysis of prediction markets vs. FiveThirtyEight on 2020 US elections. If you want to be a real nerd about this stuff, Scott Alexander’s “Mantic Monday” posts and Nuño Sempere’s Forecasting Newsletter have good regular discussions of new developments in the space.)

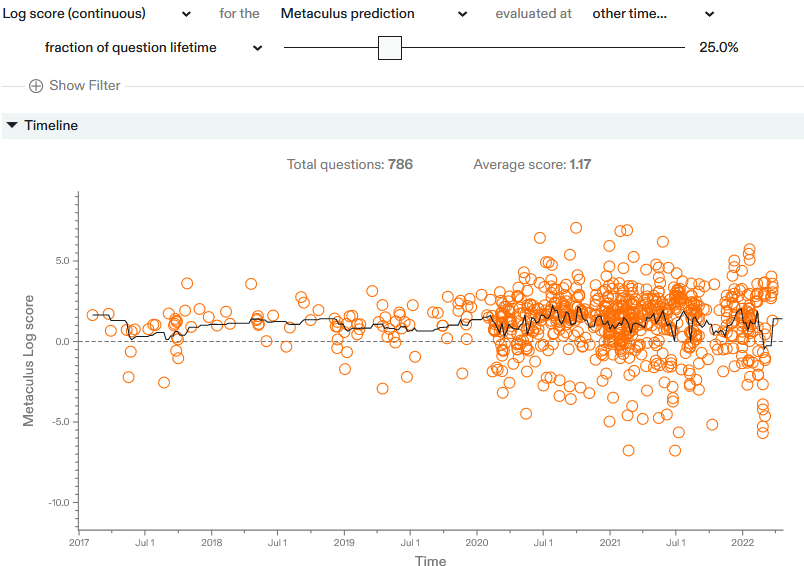

A potentially very important caveat is that these calibration charts only score the accuracy of yes-versus-no types of questions. For date-based questions (e.g. “AGI when?”) or questions with continuous outcomes, scoring accuracy is more complicated. I don’t know of a great way to score, let alone visualize, the accuracy of date-based questions; send suggestions. Metaculus’ track record page offers the log score, which is one particular accuracy statistic, for all questions:

As far as I’m aware, the only way to interpret this is: ‘higher is gooder’. I also do not have any reference forecasters to which this can be compared, unlike for the binary questions above – again, making things hard to interpret. The log score also does not capture all of the information contained in the entirety of the CDF of a forecast; only the forecasted probability at the resolution date.

Last, given that Metaculus launched in 2017, it’s not yet possible to analyze the accuracy of long-run forecasts.

III. Metaculus for forecasting monetary policy design

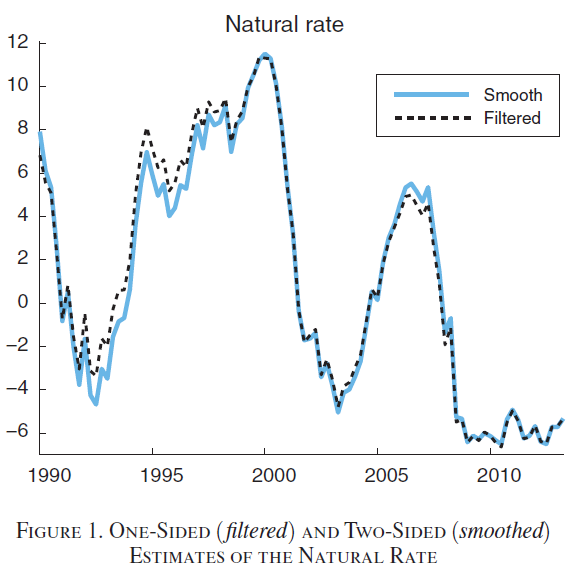

For macroeconomics, we already have some forecasts directly from financial markets for short- or medium-term variables, e.g. predictions for the Fed’s policy interest rate.

I think forecasts for longer-term questions and for questions not available on financial markets could be useful for researchers and practitioners. To make this argument, I’ll walk through the questions I wrote up for Metaculus, listed in the intro above.

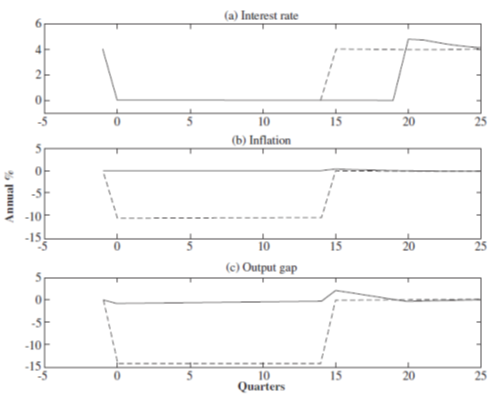

The first set of questions is about the zero lower bound and negative interest rates: when is the next time the US will get stuck at the ZLB; how many times between now and 2050 will we end up stuck there; and will the Fed push interest rates below zero if so.

This is of extreme practical importance. The ZLB is conventionally believed to be an important constraint on monetary policy and consequently a justification for fiscal intervention (“stimmies”). If we will hit the ZLB frequently in coming decades, then it is even more important than previously considered to (1) develop our understanding of optimal policy at the ZLB, and (2) analyze more out-of-the-Overton-window policy choices, like using negative rates.

A policy even further out of the Overton window would be the abolition of cash, which is another topic I solicit forecasts on for the US as well as for China (where likely this will occur sooner). If cash is abolished, then the ZLB ceases to be a constraint. (This to me implies pretty strongly that we ought to have abolished cash, yesterday.)

Cash abolition would be useful to predict not just so that I can think about how much time to spend analyzing such a policy; but also because abolishing cash would mean that studying “optimal policy constrained by the ZLB” would be less important – there would be no ZLB to worry about!

Finally, I asked about if the Fed will switch from its current practice of focusing on stabilizing inflation (“inflation targeting”/“flexible average inflation targeting”) to nominal GDP or nominal wage targeting. This is a topic especially close to my own research.

IV. Questions I did not ask

There are a lot of other questions, or variations on the above questions, that I could have asked but did not.

Expanding my questions to other countries and regions is one obvious possibility. As just one example, it would also be useful to have a forecast for when cash in the eurozone might be abolished. The US-centrism of my questions pains me, but I didn’t want to spam the Metaculus platform with small variations on questions. You should go create these questions though 😊.

Another possible set of questions would have had conditional forecasts. “Will the US ever implement negative rates”; “conditional on the US ever implementing negative rates, when will it first do so”. This would be useful because the questions I created have to smush together these two questions. For example: if Metaculus forecasts 2049 for the expected date of cash abolition, does that mean forecasters have a high probability on cash being abolished, but not until the late 2040s; or that they expect it may be abolished in the next decade, but otherwise will never be abolished? It’s hard to disentangle when there’s only one question, although forecasters do provide their full CDFs.

A final set of possible questions that I considered were too subjective for the Metaculus platform: for example, “Will the ECB ever adopt a form of level targeting?” The resolution criteria for this question were just too hard to specify precisely. (As an example of the difficulty: does the Fed’s new policy of “flexible average inflation targeting” count as level targeting?) Perhaps I will post these more subjective questions on Manifold Markets, a new Metaculus competitor which allows for more subjectivity (which, of course, comes at some cost).

Thanks to Christian Williams and Alyssa Stevens from the team at Metaculus for support, and to Eric Neyman for useful discussion on scoring forecasts.

I want to argue that Newcomb’s problem does not reveal any deep flaw in standard decision theory. There is no need to develop new decision theories to understand the problem.

I’ll explain Newcomb’s problem and expand on these points below, but here’s the punchline up front.

TLDR:

I emphasize that the textbook version of expected utility theory lets us see all this! There’s no need to develop new decision theories. Time consistency is an important but also well-known feature of bog-standard theory.

I. Background on Newcomb

(You can skip this section if you’re already familiar.)

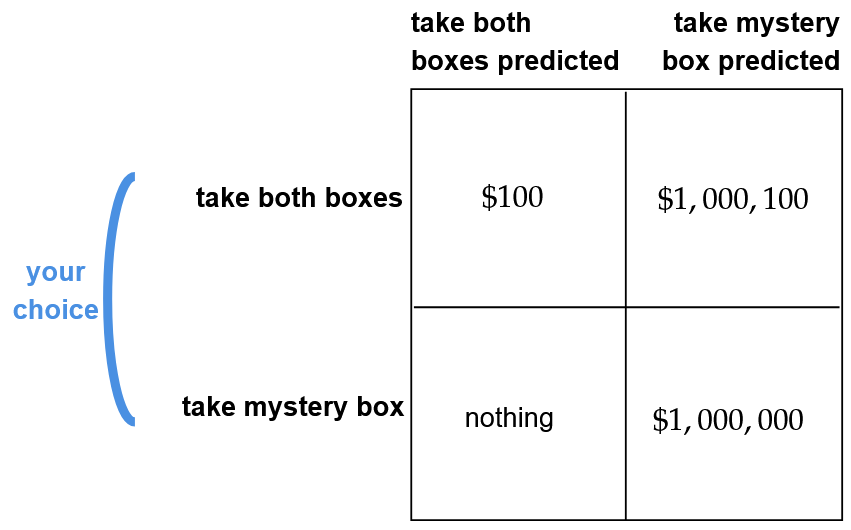

Newcomb’s problem is a favorite thought experiment for philosophers of a certain bent and for philosophically-inclined decision theorists (hi). The problem is the following:

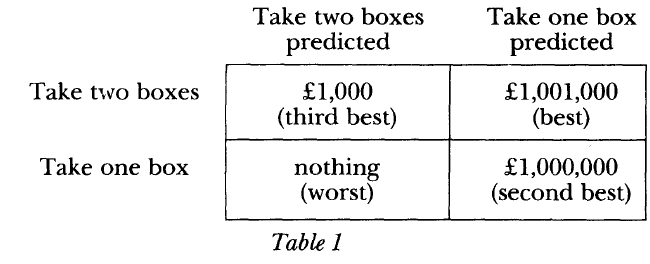

As Robert Nozick famously put it, “To almost everyone, it is perfectly clear and obvious what should be done. The difficulty is that these people seem to divide almost evenly on the problem, with large numbers thinking that the opposing half is just being silly.”

The argument for taking both boxes goes like, ‘If there’s a million dollars already in the mystery box, well then I’m better off taking both and getting a million plus a hundred. If there’s nothing in the mystery box, I’m better off taking both and at least getting the hundred bucks. So either way I’m better off taking both boxes!’

The argument for “one-boxing” – taking only the one mystery box – goes like, ‘If I only take the mystery box, the prediction machine forecasted I would do this, and so I’ll almost certainly get the million dollars. So I should only take the mystery box!’

II. The critique of expected utility theory

It’s often argued that standard decision theory would have you “two-box”, but that since ‘you win more’ by one-boxing, we ought to develop a new form of decision theory (EDT/UDT/TDT/LDT/FDT/...) that prescribes you should one-box.

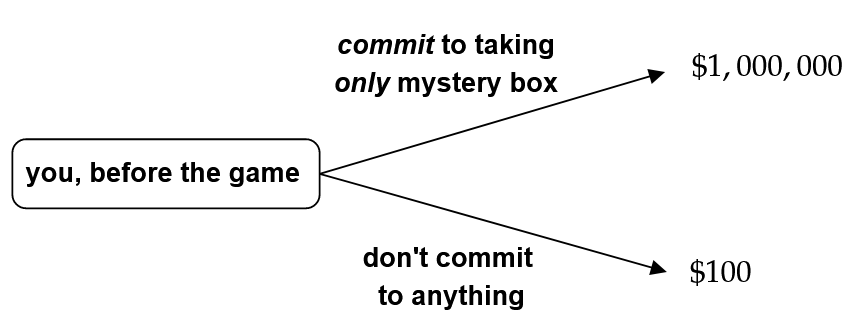

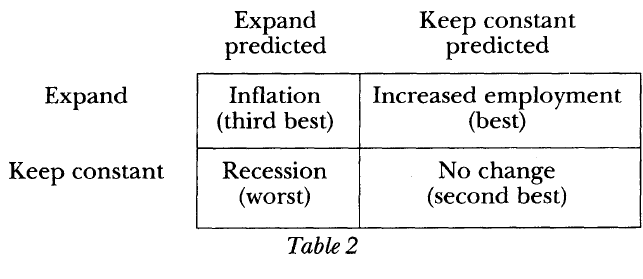

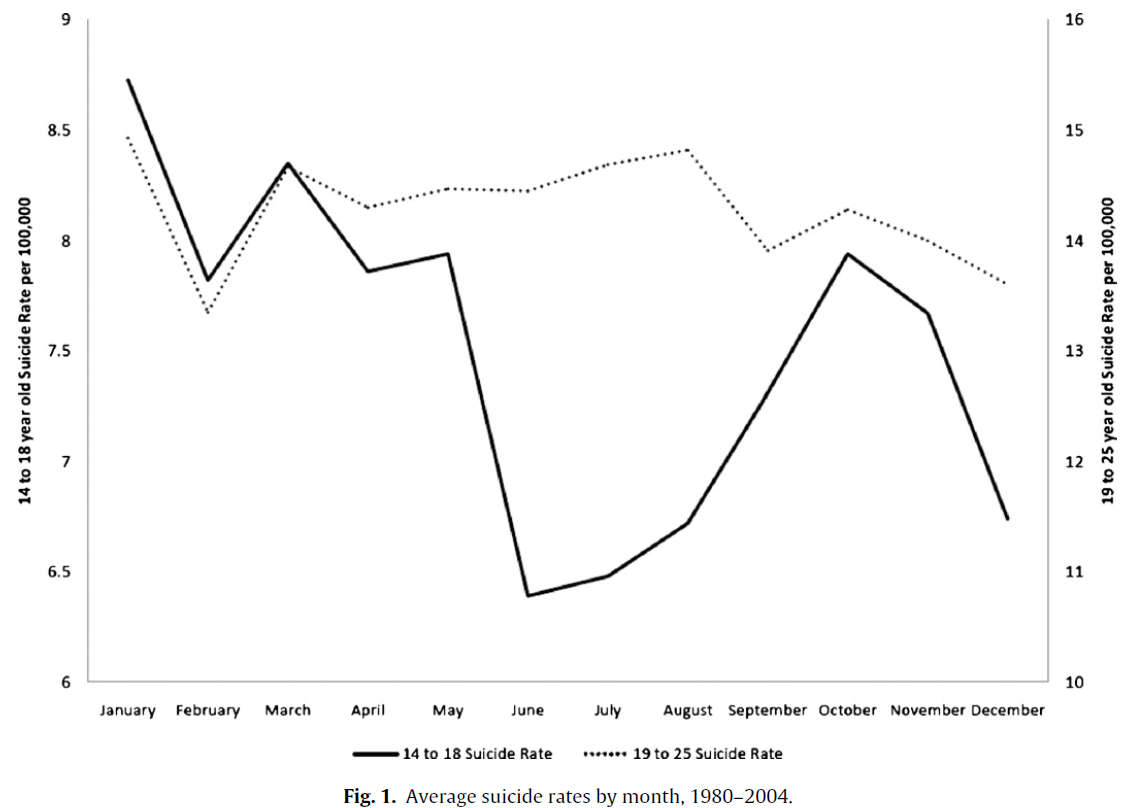

My claim is essentially: Newcomb’s problem needs to be specified more precisely, and once done so, standard decision theory correctly implies you could one- or two-box, depending on from which point in time the question is being asked.